2. Bootstrapping Phases

Greg Lehey suggested this bootstrapping method. It uses knowledge of how Vinum internally allocates disk space to avoid copying data. Instead, Vinum objects are configured so that they occupy the same disk space where /stand/sysinstall built filesystems. The filesystems are thus embedded within Vinum objects without copying.

There are several distinct phases to the Vinum bootstrapping procedure. Each of these phases is presented in a separate section below. The section starts with a general overview of the phase and its goals. It then gives example steps for the two-spindle scenario presented here and advice on how to adapt them for your server. (If you are reading for a general understanding of Vinum bootstrapping, the example sections for each phase can safely be skipped.) The remainder of this section gives an overview of the entire bootstrapping process.

Phase 1 involves planning and preparation. We will balance requirements for the server against available resources and make design tradeoffs. We will plan the transition from no Vinum to Vinum on just one spindle, to Vinum on two spindles.

In phase 2, we will install a minimum FreeBSD system on a single spindle using partitions of type 4.2BSD (regular UFS filesystems).

Phase 3 will embed the non-root filesystems from phase 2 in Vinum objects. Note that Vinum will be up and running at this point, but it cannot yet provide any resilience since it only has one spindle on which to store data.

Finally, in phase 4, we configure Vinum on a second spindle and make a backup copy of the root filesystem. This will give us resilience on all filesystems.

2.1. Bootstrapping Phase 1: Planning and Preparation

Our goal in this phase is to define the different partitions we will need and examine their requirements. We will also look at available disk drives and controllers and allocate partitions to them. Finally, we will determine the size of each partition and its use during the bootstrapping process. After this planning is complete, we can optionally prepare to use some tools that will make bootstrapping Vinum easier.

Several key questions must be answered in this planning phase:

-

What filesystems and partitions will be needed?

-

How will they be used?

-

How will we name each spindle?

-

How will the partitions be ordered for each spindle?

-

How will partitions be assigned to the spindles?

-

How will partitions be configured? Resilience or performance?

-

What technique will be used to achieve resilience?

-

What spindles will be used?

-

How will they be configured on the available controllers?

-

How much space is required for each partition?

2.1.1. Phase 1 Example

In this example, I will assume a scenario where we are building a minimal foundation for a failure-resilient server. Hence we will need at least root, /usr, /home, and swap partitions. The root, /usr, and /home filesystems all need resilience since the server will not be much good without them. The swap partition needs performance first and generally does not need resilience since nothing it holds needs to be retained across a reboot.

2.1.1.1. Spindle Naming

The kernel would refer to the master spindle on the primary and secondary ATA controllers as /dev/ad0 and /dev/ad2 respectively. [1] But Vinum also needs a name for each spindle that stays the same regardless of how the spindle is attached to the CPU (i.e., if the drive moves, the Vinum name moves with the drive).

Some recovery techniques documented below suggest moving a spindle from the secondary ATA controller to the primary ATA controller. (Indeed, the flexibility of making such moves is a key benefit of Vinum especially if you are managing a large number of spindles.) After such a drive/controller swap, the kernel will see what used to be /dev/ad2 as /dev/ad0 but Vinum will still call it by whatever name it had when it was attached to /dev/ad2 (i.e., when it was “created” or first made known to Vinum).

Since connections can change, it is best to give each spindle a unique, abstract name that gives no hint of how it is attached. Avoid names that suggest a manufacturer, model number, physical location, or membership in a sequence (for example, avoid names like upper and lower; alpha and beta; SCSI1 and SCSI2; or Seagate1 and Seagate2). Such names are likely to lose their uniqueness or get out of sequence someday even if they seem like great names today.

Tip: Once you have picked names for your spindles, label them with a permanent marker. If you have hot-swappable hardware, write the names on the sleds in which the spindles are mounted. This will significantly reduce the likelihood of error when you are moving spindles around later as part of failure recovery or routine system management procedures.

In the instructions that follow, Vinum will name the root spindle YouCrazy and the rootback spindle UpWindow. I will only use /dev/ad0 when I want to refer to whichever of the two spindles is currently attached as /dev/ad0.

2.1.1.2. Partition Ordering

Modern disk drives operate with fairly uniform areal density across the surface of the disk. That implies that more data is available under the heads without seeking on the outer cylinders than on the inner cylinders. We will allocate partitions most critical to system performance from these outer cylinders as /stand/sysinstall generally does.

The root filesystem is traditionally the outermost, even though it generally is not as critical to system performance as others. (However root can have a larger impact on performance if it contains /tmp and /var as it does in this example.) The FreeBSD boot loaders assume that the root filesystem lives in the a partition. There is no requirement that the a partition start on the outermost cylinders, but this convention makes it easier to manage disk labels.

Swap performance is critical so it comes next on our way toward the center. I/O operations here tend to be large and contiguous. Having as much data under the heads as possible avoids seeking while swapping.

With all the smaller partitions out of the way, we finish up the disk with /home and /usr. Access patterns here tend not to be as intense as for other filesystems (especially if there is an abundant supply of RAM and read cache hit rates are high).

If the pair of spindles you have are large enough to allow for more than /home and /usr, it is fine to plan for additional filesystems here.

2.1.1.3. Assigning Partitions to Spindles

We will want to assign partitions to these spindles so that either can fail without loss of data on filesystems configured for resilience.

Reliability on /usr and /home is best achieved using Vinum mirroring. Resilience will have to come differently, however, for the root filesystem since Vinum is not a part of the FreeBSD boot sequence. Here we will have to settle for two identical partitions with a periodic copy from the primary to the backup secondary.

The kernel already has support for interleaved swap across all available partitions so there is no need for help from Vinum here. /stand/sysinstall will automatically configure /etc/fstab for all swap partitions given.

The Vinum bootstrapping method given below requires a pair of spindles that I will call the root spindle and the rootback spindle.

Important: The rootback spindle must be the same size as or larger than the root spindle.

These instructions first allocate all space on the root spindle and then allocate exactly that amount of space on a rootback spindle. (After Vinum is bootstrapped, there is nothing special about either of these spindles--they are interchangeable.) You can later use the remaining space on the rootback spindle for other filesystems.

If you have more than two spindles, the bootvinum Perl script and the procedure below will help you initialize them for use with Vinum. However you will have to figure out how to assign partitions to them on your own.

2.1.1.4. Assigning Space to Partitions

For this example, I will use two spindles: one with 4,124,673 blocks (about 2 GB) on /dev/ad0 and one with 8,420,769 blocks (about 4 GB) on /dev/ad2.

It is best to configure your two spindles on separate controllers so that both can operate in parallel and so that you will have failure resilience in case a controller dies. Note that mirrored volume write performance will be halved in cases where both spindles share a controller that requires they operate serially (as is often the case with ATA controllers). One spindle will be the master on the primary ATA controller and the other will be the master on the secondary ATA controller.

Recall that we will be allocating space on the smaller spindle first and the larger spindle second.

2.1.1.5. Assigning Partitions on the Root Spindle

We will allocate 200,000 blocks (about 98 MB) for a root filesystem on each spindle (/dev/ad0s1a and /dev/ad2s1a). We will initially allocate 200,265 blocks for a swap partition on each spindle, giving a total of about 196 MB of swap space (/dev/ad0s1b and /dev/ad2s1b).

Note: We will lose 265 blocks from each swap partition as part of the bootstrapping process. This is the size of the space used by Vinum to store configuration information. The space will be taken from swap and given to a vinum partition but will be unavailable for Vinum subdisks.

Note: I have done the partition allocation in nice round numbers of blocks just to emphasize where the 265 blocks go. There is nothing wrong with allocating space in MB if that is more convenient for you.

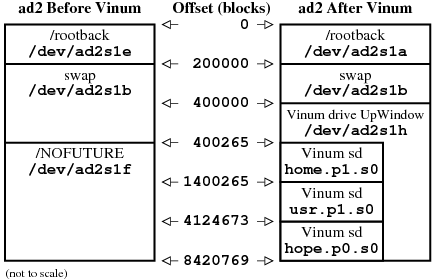

This leaves 4,124,673 - 200,000 - 200,265 = 3,724,408 blocks (about 1,818 MB) on the root spindle for Vinum partitions (/dev/ad0s1e and /dev/ad2s1f). Of this, allocate 1,000,000 blocks (about 488 MB) for /home and the remaining 2,724,408 blocks (about 1,330 MB) for /usr. The 265 blocks for Vinum configuration information are not part of this total; they come out of swap, as noted above. See Figure 2 below to see this graphically.

The left-hand side of Figure 2 below shows what spindle ad0 will look like at the end of phase 2. The right-hand side shows what it will look like at the end of phase 3.

2.1.1.6. Assigning Partitions on the Rootback Spindle

The /rootback and swap partition sizes on the rootback spindle must match the root and swap partition sizes on the root spindle. That leaves 8,420,769 - 200,000 - 200,265 = 8,020,504 blocks for the Vinum partition. Mirrors of /home and /usr receive the same allocation as on the root spindle. That will leave an extra 2 GB or so that we can deal with later. See Figure 3 below to see this graphically.

The left-hand side of Figure 3 below shows what spindle ad2 will look like at the beginning of phase 4. The right-hand side shows what it will look like at the end.
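The block arithmetic for both spindles can be double-checked with a few lines of shell. This is only a sketch: it assumes 512-byte blocks (2,048 blocks per MB), which is consistent with the 488 MB figure given for 1,000,000 blocks, and the function names are made up for illustration.

```shell
#!/bin/sh
# Sketch only: check the example block budget for both spindles.
# Assumes 512-byte blocks; function names are illustrative.

# Blocks left on a spindle after the root (or /rootback) and swap partitions.
# usage: remaining_blocks TOTAL ROOT SWAP
remaining_blocks() {
    echo $(( $1 - $2 - $3 ))
}

# Convert 512-byte blocks to whole MB (truncated, not rounded).
# usage: blocks_to_mb BLOCKS
blocks_to_mb() {
    echo $(( $1 / 2048 ))
}

root_left=$(remaining_blocks 4124673 200000 200265)   # root spindle
back_left=$(remaining_blocks 8420769 200000 200265)   # rootback spindle
usr_blocks=$(( root_left - 1000000 ))                 # what /home leaves for /usr

echo "root spindle remainder:     $root_left blocks, $(blocks_to_mb "$root_left") MB"
echo "/usr:                       $usr_blocks blocks, $(blocks_to_mb "$usr_blocks") MB"
echo "rootback spindle remainder: $back_left blocks, $(blocks_to_mb "$back_left") MB"
```

Substitute the block counts of your own spindles to see how much space is left for Vinum before you start /stand/sysinstall.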

2.1.1.7. Preparation of Tools

The bootvinum Perl script given below in Appendix A will make the Vinum bootstrapping process much easier if you can run it on the machine being bootstrapped. It is over 200 lines long, so you would not want to type it in. At this point, I recommend that you copy it to a floppy or arrange some other way of making it readily available when it is needed later. For example:

# fdformat -f 1440 /dev/fd0
# newfs_msdos -f 1440 /dev/fd0
# mount_msdos /dev/fd0 /mnt
# cp /usr/share/examples/vinum/bootvinum /mnt

XXX Someday, I would like this script to live in /usr/share/examples/vinum. Till then, please use this link to get a copy.

2.2. Bootstrapping Phase 2: Minimal OS Installation

Our goal in this phase is to complete the smallest possible FreeBSD installation in such a way that we can later install Vinum. We will use only partitions of type 4.2BSD (i.e., regular UFS file systems) since that is the only type supported by /stand/sysinstall.

2.2.1. Phase 2 Example

-

Start up the FreeBSD installation process by running /stand/sysinstall from installation media as you normally would.

-

Fdisk partition all spindles as needed.

Important: Make sure to select BootMgr for all spindles.

-

Partition the root spindle with appropriate block allocations as described above in Section 2.1.1.5. For this example on a 2 GB spindle, I will use 200,000 blocks for root, 200,265 blocks for swap, 1,000,000 blocks for /home, and the rest of the spindle (2,724,408 blocks) for /usr. (/stand/sysinstall should automatically assign these to /dev/ad0s1a, /dev/ad0s1b, /dev/ad0s1e, and /dev/ad0s1f by default.)

Note: If you prefer Soft Updates as I do and you are using 4.4-RELEASE or better, this is a good time to enable them.

-

Partition the rootback spindle with the appropriate block allocations as described above in Section 2.1.1.6. For this example on a 4 GB spindle, I will use 200,000 blocks for /rootback, 200,265 blocks for swap, and the rest of the spindle (8,020,504 blocks) for /NOFUTURE. (/stand/sysinstall should automatically assign these to /dev/ad2s1e, /dev/ad2s1b, and /dev/ad2s1f by default.)

Note: We do not really want to have a /NOFUTURE UFS filesystem (we want a vinum partition instead), but that is the best choice we have for the space given the limitations of /stand/sysinstall. Mount point names beginning with NOFUTURE and rootback serve as sentinels to the bootstrapping script presented in Appendix A below.
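To see which entries a script could recognize by these sentinel names, you can scan /etc/fstab yourself. The following is a hypothetical sketch and not the bootvinum script from Appendix A; the function name find_sentinels is an invention for this example.

```shell
#!/bin/sh
# Sketch only: list fstab entries whose mount point starts with a sentinel
# name. This is not the bootvinum script, just an illustration of the idea.
# usage: find_sentinels FSTAB_FILE
find_sentinels() {
    # Skip comment lines; print device and mount point for sentinel entries.
    awk '$1 !~ /^#/ && $2 ~ /^\/(NOFUTURE|rootback)/ { print $1, $2 }' "$1"
}
```

Running `find_sentinels /etc/fstab` after the installation should list the /rootback and /NOFUTURE entries you created, one per line.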

-

Partition any other spindles with swap if desired and a single /NOFUTURExx filesystem.

-

Select a minimum system install for now even if you want to end up with more distributions loaded later.

Tip: Do not worry about system configuration options at this point--get Vinum set up and get the partitions in the right places first.

-

Exit /stand/sysinstall and reboot. Do a quick test to verify that the minimum installation was successful.

The left-hand side of Figure 2 above and the left-hand side of Figure 3 above show how the disks will look at this point.

2.3. Bootstrapping Phase 3: Root Spindle Setup

Our goal in this phase is to get Vinum set up and running on the root spindle. We will embed the existing /usr and /home filesystems in a Vinum partition. Note that the Vinum volumes created will not yet be failure-resilient since we have only one underlying Vinum drive to hold them. The resulting system will automatically start Vinum as it boots to multi-user mode.
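The embedding works because the filesystems never move on disk: the 265 blocks of Vinum configuration space are carved out of the end of swap, so /home and /usr start at the same block before and after. A small sketch of that arithmetic follows. It uses the example sizes from phase 1 and assumes offsets are counted from the start of the a partition; the variable names are illustrative only.

```shell
#!/bin/sh
# Sketch only: show that /home and /usr keep their starting blocks when
# they are embedded in the Vinum partition. Sizes are the example values
# from phase 1; offsets are relative to the start of partition a.
ROOT=200000
SWAP_BEFORE=200265
HOME_BLOCKS=1000000
CONF=265                 # blocks used for Vinum configuration information

# Phase 2 layout: root, swap, /home, /usr
home_before=$(( ROOT + SWAP_BEFORE ))
usr_before=$(( home_before + HOME_BLOCKS ))

# Phase 3 layout: root, smaller swap, Vinum partition
swap_after=$(( SWAP_BEFORE - CONF ))
vinum_start=$(( ROOT + swap_after ))
home_after=$(( vinum_start + CONF ))       # first subdisk follows the config area
usr_after=$(( home_after + HOME_BLOCKS ))

echo "/home starts at $home_before before and $home_after after"
echo "/usr  starts at $usr_before before and $usr_after after"
```

If the before and after offsets ever differ for your own layout, the disk labels do not line up and the embedded filesystems will not be readable through Vinum.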

2.3.1. Phase 3 Example

-

Log in as root.

-

We will need a directory in the root filesystem in which to keep a few files that will be used in the Vinum bootstrapping process.

# mkdir /bootvinum
# cd /bootvinum

-

Several files need to be prepared for use in bootstrapping. I have written a Perl script that makes all the required files for you. Copy this script to /bootvinum by floppy disk, tape, network, or any convenient means and then run it. (If you cannot get this script copied onto the machine being bootstrapped, then see Appendix B below for a manual alternative.)

# cp /mnt/bootvinum .
# ./bootvinum

Note: bootvinum produces no output when run successfully. If you get any errors, something may have gone wrong when you were creating partitions with /stand/sysinstall above.

Running bootvinum will:

-

Create /etc/fstab.vinum based on what it finds in your existing /etc/fstab

-

Create new disk labels for each spindle mentioned in /etc/fstab and keep copies of the current disk labels

-

Create files needed as input to vinum create for building Vinum objects on each spindle

-

Create many alternates to /etc/fstab.vinum that might come in handy should a spindle fail

You may want to take a look at these files to learn more about the disk partitioning required for Vinum or to learn more about the commands needed to create Vinum objects.
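As a rough idea of what changes between /etc/fstab and /etc/fstab.vinum, the sketch below rewrites the device field for /usr and /home so that they point at the Vinum volumes /dev/vinum/usr and /dev/vinum/home. It is an illustration only: the function name is hypothetical, and the files that bootvinum actually writes may differ in detail.

```shell
#!/bin/sh
# Sketch only: rewrite the device field of selected fstab entries so they
# point at Vinum volumes. Illustrative, not the bootvinum implementation.
# usage: to_vinum_fstab FSTAB_FILE
to_vinum_fstab() {
    awk '
        # /usr and /home become Vinum volumes named after the mount point
        $2 == "/usr" || $2 == "/home" { $1 = "/dev/vinum" $2 }
        { print }
    ' "$1"
}
```

Comparing the output of `to_vinum_fstab /etc/fstab` with the generated /etc/fstab.vinum is a quick way to confirm you understand what the script did.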

-

-

We now need to install new spindle partitioning for /dev/ad0. This requires that /dev/ad0s1b not be in use for swapping so we have to reboot in single-user mode.

-

First, reboot the system.

# reboot

-

Next, enter single-user mode.

Hit [Enter] to boot immediately, or any other key for command prompt.
Booting [kernel] in 8 seconds...
Type '?' for a list of commands, 'help' for more detailed help.
ok boot -s

-

-

In single-user mode, install the new partitioning created above.

# cd /bootvinum
# disklabel -R ad0s1 disklabel.ad0s1
# disklabel -R ad2s1 disklabel.ad2s1

Note: If you have additional spindles, repeat the above commands as appropriate for them.

-

We are about to start Vinum for the first time. It is going to want to create several device nodes under /dev/vinum so we will need to mount the root filesystem for read/write access.

# fsck -p /
# mount /

-

Now it is time to create the Vinum objects that will embed the existing non-root filesystems on the root spindle in a Vinum partition. This will load the Vinum kernel module and start Vinum as a side effect.

# vinum create create.YouCrazy

You should see a list of Vinum objects created that looks like the following:

1 drives:
D YouCrazy State: up Device /dev/ad0s1h Avail: 0/1818 MB (0%)
2 volumes:
V home State: up Plexes: 1 Size: 488 MB
V usr State: up Plexes: 1 Size: 1330 MB
2 plexes:
P home.p0 C State: up Subdisks: 1 Size: 488 MB
P usr.p0 C State: up Subdisks: 1 Size: 1330 MB
2 subdisks:
S home.p0.s0 State: up PO: 0 B Size: 488 MB
S usr.p0.s0 State: up PO: 0 B Size: 1330 MB

You should also see several kernel messages which state that the Vinum objects you have created are now up.

-

Our non-root filesystems should now be embedded in a Vinum partition and hence available through Vinum volumes. It is important to test that this embedding worked.

# fsck -n /dev/vinum/home
# fsck -n /dev/vinum/usr

This should produce no errors. If it does produce errors, do not fix them. Instead, go back and examine the root spindle partition tables before and after Vinum to see if you can spot the error. You can back out the partition table changes by using disklabel -R with the disklabel.*.b4vinum files.
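If you do need to back out, the following sketch prints the disklabel commands that would restore each saved label, without running them. It assumes the saved files are named disklabel.<slice>.b4vinum (for example disklabel.ad0s1.b4vinum), which is inferred from the pattern above; check the actual file names in /bootvinum before relying on it.

```shell
#!/bin/sh
# Sketch only: print the disklabel commands that would restore every saved
# pre-Vinum label found in a directory. Nothing is executed.
# Assumes saved labels are named disklabel.<slice>.b4vinum.
# usage: backout_plan DIR
backout_plan() {
    for f in "$1"/disklabel.*.b4vinum; do
        [ -e "$f" ] || continue           # no saved labels found
        slice=${f##*/disklabel.}          # strip directory and prefix
        slice=${slice%.b4vinum}           # strip suffix
        echo "disklabel -R $slice $f"
    done
}
```

Run `backout_plan /bootvinum`, review the printed commands, and then type the ones you want by hand.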

-

While we have the root filesystem mounted read/write, this is a good time to install /etc/fstab.

# mv /etc/fstab /etc/fstab.b4vinum
# cp /etc/fstab.vinum /etc/fstab

-

We are now done with tasks requiring single-user mode, so it is safe to go multi-user from here on.

# ^D

-

Log in as root.

-

Edit /etc/rc.conf and add this line:

start_vinum="YES"
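If you would rather script this edit than use an editor, a minimal sketch follows. The function name is hypothetical. It appends the line only when no start_vinum setting is present, so it is safe to run twice, but it will not change an existing start_vinum="NO" line.

```shell
#!/bin/sh
# Sketch only: add start_vinum="YES" to an rc.conf-style file unless a
# start_vinum line is already present.
# usage: enable_vinum_at_boot RC_CONF_FILE
enable_vinum_at_boot() {
    if ! grep -q '^start_vinum=' "$1" 2>/dev/null; then
        echo 'start_vinum="YES"' >> "$1"
    fi
}
```

For example, `enable_vinum_at_boot /etc/rc.conf` has the same effect as the manual edit above.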

2.4. Bootstrapping Phase 4: Rootback Spindle Setup

Our goal in this phase is to get redundant copies of all data from the root spindle to the rootback spindle. We will first create the necessary Vinum objects on the rootback spindle. Then we will ask Vinum to copy the data from the root spindle to the rootback spindle. Finally, we use dump and restore to copy the root filesystem.

2.4.1. Phase 4 Example

-

Now that Vinum is running on the root spindle, we can bring it up on the rootback spindle so that our Vinum volumes can become failure-resilient.

# cd /bootvinum
# vinum create create.UpWindow

You should see a list of Vinum objects created that looks like the following:

2 drives:
D YouCrazy State: up Device /dev/ad0s1h Avail: 0/1818 MB (0%)
D UpWindow State: up Device /dev/ad2s1h Avail: 2096/3915 MB (53%)
2 volumes:
V home State: up Plexes: 2 Size: 488 MB
V usr State: up Plexes: 2 Size: 1330 MB
4 plexes:
P home.p0 C State: up Subdisks: 1 Size: 488 MB
P usr.p0 C State: up Subdisks: 1 Size: 1330 MB
P home.p1 C State: faulty Subdisks: 1 Size: 488 MB
P usr.p1 C State: faulty Subdisks: 1 Size: 1330 MB
4 subdisks:
S home.p0.s0 State: up PO: 0 B Size: 488 MB
S usr.p0.s0 State: up PO: 0 B Size: 1330 MB
S home.p1.s0 State: stale PO: 0 B Size: 488 MB
S usr.p1.s0 State: stale PO: 0 B Size: 1330 MB

You should also see several kernel messages which state that some of the Vinum objects you have created are now up while others are faulty or stale.

-

Now we ask Vinum to copy each of the subdisks on drive YouCrazy to drive UpWindow. This will change the state of the newly created Vinum subdisks from stale to up. It will also change the state of the newly created Vinum plexes from faulty to up.

First, we do the new subdisk we added to /home.

# vinum start -w home.p1.s0
reviving home.p1.s0
(time passes . . . )
home.p1.s0 is up by force
home.p1 is up
home.p1.s0 is up

Note: My 5,400 RPM EIDE spindles copied at about 3.5 MBytes/sec. Your mileage may vary.

-

Next we do the new subdisk we added to /usr.

# vinum start -w usr.p1.s0
reviving usr.p1.s0
(time passes . . . )
usr.p1.s0 is up by force
usr.p1 is up
usr.p1.s0 is up

All Vinum objects should be in state up at this point. The output of vinum list should look like the following:

2 drives:
D YouCrazy State: up Device /dev/ad0s1h Avail: 0/1818 MB (0%)
D UpWindow State: up Device /dev/ad2s1h Avail: 2096/3915 MB (53%)
2 volumes:
V home State: up Plexes: 2 Size: 488 MB
V usr State: up Plexes: 2 Size: 1330 MB
4 plexes:
P home.p0 C State: up Subdisks: 1 Size: 488 MB
P usr.p0 C State: up Subdisks: 1 Size: 1330 MB
P home.p1 C State: up Subdisks: 1 Size: 488 MB
P usr.p1 C State: up Subdisks: 1 Size: 1330 MB
4 subdisks:
S home.p0.s0 State: up PO: 0 B Size: 488 MB
S usr.p0.s0 State: up PO: 0 B Size: 1330 MB
S home.p1.s0 State: up PO: 0 B Size: 488 MB
S usr.p1.s0 State: up PO: 0 B Size: 1330 MB
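Rather than reading the listing by eye, you can filter it for anything that is not up. The sketch below reads listing text on standard input and prints every line whose State: field is something other than up. It assumes the output format shown above, and the function name not_up is made up for this example.

```shell
#!/bin/sh
# Sketch only: read `vinum list` style output on stdin and print every
# object line whose State is not "up". Exit status is 1 if any were found.
not_up() {
    awk '
        {
            # Find the "State:" label and inspect the word that follows it.
            for (i = 1; i < NF; i++)
                if ($i == "State:" && $(i + 1) != "up") { print; bad = 1 }
        }
        END { exit bad + 0 }
    '
}
```

Used as `vinum list | not_up`, it should print nothing at this point. The same filter can be reused later to spot faulty or stale objects after a hardware problem.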

-

Copy the root filesystem so that you will have a backup.

# cd /rootback
# dump 0f - / | restore rf -
# rm restoresymtable
# cd /

Note: You may see errors like this:

./tmp/rstdir1001216411: (inode 558) not found on tape
cannot find directory inode 265
abort? [yn] n
expected next file 492, got 491

They seem to cause no harm. I suspect they are a consequence of dumping the filesystem containing /tmp and/or the pipe connecting dump and restore.

-

Make a directory on which we can mount a damaged root filesystem during the recovery process.

# mkdir /rootbad

-

Remove sentinel mount points that are now unused.

# rmdir /NOFUTURE*

-

Create empty Vinum drives on remaining spindles.

# vinum create create.ThruBank
# ...

At this point, the reliable server foundation is complete. The right-hand side of Figure 2 above and the right-hand side of Figure 3 above show how the disks will look.

You may want to do a quick reboot to multi-user and give it a quick test drive. This is also a good point to complete installation of other distributions beyond the minimal install. Add packages, ports, and users as required. Configure /etc/rc.conf as required.

Tip: After you have completed your server configuration, remember to do one more copy of root to /rootback as shown above before placing the server into production.

Tip: Make a schedule to refresh /rootback periodically.

Tip: It may be a good idea to mount /rootback read-only for normal operation of the server. This does, however, complicate the periodic refresh a bit.
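With a read-only /rootback, each refresh has to remount the filesystem read/write, copy root, and remount it read-only again. The sketch below only prints that command sequence so you can review it; nothing is executed. It assumes the -u, -w, and -r options of mount behave as described in mount(8), and the function name is hypothetical.

```shell
#!/bin/sh
# Sketch only: print the commands for one refresh of a read-only /rootback.
# Nothing is executed; review the output before running it by hand.
# usage: rootback_refresh_plan [MOUNT_POINT]   (defaults to /rootback)
rootback_refresh_plan() {
    mp=${1:-/rootback}
    cat <<EOF
mount -u -w $mp
cd $mp
dump 0f - / | restore rf -
rm restoresymtable
cd /
mount -u -r $mp
EOF
}

rootback_refresh_plan
```

The final remount may fail if anything still has files open for writing on /rootback, so check its result before assuming the backup is protected again.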

Tip: Do not forget to watch /var/log/messages carefully for errors. Vinum may automatically avoid failed hardware in a way that users do not notice. You must watch for such failures and get them repaired before a second failure results in data loss. You may see Vinum noting damaged objects at server boot time.