FreeBSD Developers' Handbook

The FreeBSD Documentation Project

Copyright © 2000, 2001, 2002, 2003, 2004, 2005, 2006, 2007 The FreeBSD Documentation Project

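Welcome to the Developers' Handbook. This manual is a work in progress and is the work of many individuals. Many sections still need to be updated and some are still empty. If you are interested in helping with this project, send email to the FreeBSD documentation project mailing list.

The latest version of this document is always available from the FreeBSD web site. It may also be downloaded in a variety of formats from the FreeBSD FTP server or from one of the mirror sites.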

Redistribution and use in source (SGML DocBook) and 'compiled' forms (SGML, HTML, PDF, PostScript, RTF and so forth) with or without modification, are permitted provided that the following conditions are met:

- Redistributions of source code (SGML DocBook) must retain the above copyright notice, this list of conditions and the following disclaimer as the first lines of this file unmodified.

- Redistributions in compiled form (transformed to other DTDs, converted to PDF, PostScript, RTF and other formats) must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

Important: THIS DOCUMENTATION IS PROVIDED BY THE FREEBSD DOCUMENTATION PROJECT "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE FREEBSD DOCUMENTATION PROJECT BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS DOCUMENTATION, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

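FreeBSD is a registered trademark of the FreeBSD Foundation.

Apple, AirPort, FireWire, Mac, Macintosh, Mac OS, Quicktime, and TrueType are trademarks of Apple Computer, Inc., registered in the United States and other countries.

IBM, AIX, EtherJet, Netfinity, OS/2, PowerPC, PS/2, S/390, and ThinkPad are registered trademarks or trademarks of International Business Machines Corporation in the United States and other countries.

IEEE, POSIX, and 802 are registered trademarks of Institute of Electrical and Electronics Engineers, Inc. in the United States.

Intel, Celeron, EtherExpress, i386, i486, Itanium, Pentium, and Xeon are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Linux is a registered trademark of Linus Torvalds.

Microsoft, IntelliMouse, MS-DOS, Outlook, Windows, Windows Media, and Windows NT are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.

Motif, OSF/1, and UNIX are registered trademarks, and IT DialTone and The Open Group are trademarks, of The Open Group in the United States and other countries.

Sun, Sun Microsystems, Java, Java Virtual Machine, JavaServer Pages, JDK, JSP, JVM, Netra, Solaris, StarOffice, Sun Blade, Sun Enterprise, Sun Fire, SunOS, and Ultra are trademarks or registered trademarks of Sun Microsystems, Inc. in the United States and other countries.

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this document, and the FreeBSD Project was aware of the trademark claim, the designations have been followed by the '™' or the '®' symbol.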

- Table of Contents

- I. 基本概念

-

- 1 簡介

-

- 1.1 在 FreeBSD 開發程式

- 1.2 The BSD Vision

- 1.3 程式架構指南

- 1.4 /usr/src 的架構

- 2 程式開發工具

-

- 2.1 概敘

- 2.2 簡介

- 2.3 Programming 概念

- 2.4 用 cc 來編譯程式

- 2.5 Make

- 2.6 Debugging

- 2.7 Using Emacs as a Development Environment

- 2.8 Further Reading

- 3 Secure Programming

-

- 3.1 Synopsis

- 3.2 Secure Design Methodology

- 3.3 Buffer Overflows

- 3.4 SetUID issues

- 3.5 Limiting your program's environment

- 3.6 Trust

- 3.7 Race Conditions

- 4 Localization and Internationalization - L10N and I18N

- 5 Source Tree Guidelines and Policies

-

- 5.1 MAINTAINER on Makefiles

- 5.2 Contributed Software

- 5.3 Encumbered Files

- 5.4 Shared Libraries

- 6 Regression and Performance Testing

- II. Interprocess Communication(IPC)

-

- 7 Sockets

-

- 7.1 Synopsis

- 7.2 Networking and Diversity

- 7.3 Protocols

- 7.4 The Sockets Model

- 7.5 Essential Socket Functions

- 7.6 Helper Functions

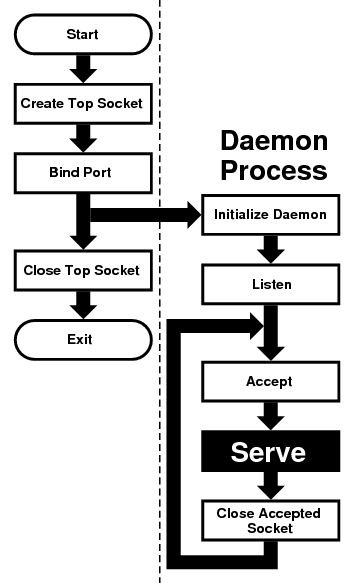

- 7.7 Concurrent Servers

- 8 IPv6 Internals

- III. Kernel(核心)

-

- 9 DMA

- 10 Kernel Debugging

-

- 10.1 Obtaining a Kernel Crash Dump

- 10.2 Debugging a Kernel Crash Dump with kgdb

- 10.3 Debugging a Crash Dump with DDD

- 10.4 Post-Mortem Analysis of a Dump

- 10.5 On-Line Kernel Debugging Using DDB

- 10.6 On-Line Kernel Debugging Using Remote GDB

- 10.7 Debugging Loadable Modules Using GDB

- 10.8 Debugging a Console Driver

- 10.9 Debugging the Deadlocks

- IV. Architectures(電腦架構)

-

- 11 x86 Assembly Language Programming

-

- 11.1 Synopsis

- 11.2 The Tools

- 11.3 System Calls

- 11.4 Return Values

- 11.5 Creating Portable Code

- 11.6 Our First Program

- 11.7 Writing UNIX® Filters

- 11.8 Buffered Input and Output

- 11.9 Command Line Arguments

- 11.10 UNIX Environment

- 11.11 Working with Files

- 11.12 One-Pointed Mind

- 11.13 Using the FPU

- 11.14 Caveats

- 11.15 Acknowledgements

- V. 附錄

- List of Examples

- 2-1. A sample .emacs file

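I. Basics

Chapter 1 Introduction

Contributed by Murray Stokely and Jeroen Ruigrok van der Werven.

1.1 Developing on FreeBSD

So here we are. FreeBSD is installed and you are ready to start programming on it. But where to start? What does FreeBSD provide for writing programs? What can it do for you as a programmer?

This chapter tries to answer some of these questions. Programming, like any other trade, has different levels of proficiency: for some it is a hobby, for others it is their profession. The material here is aimed mainly at the beginning programmer, but it should also be useful to experienced developers who are unfamiliar with FreeBSD.

1.2 The BSD Vision

So that the programs you write offer good usability, performance and stability on UNIX®-like systems, we first need to introduce some ideas from the original software tools ideology.

1.3 Architectural Guidelines

The ideas we want to introduce are the following:

- Do not add new functionality unless an implementor cannot complete a real application without it.

- It is as important to decide what a system is not as to decide what it is. Do not serve all the world's needs; rather, make the system extensible so that additional needs can be met in an upwardly compatible fashion.

- The only thing worse than generalizing from one example is generalizing from no examples at all.

- If a problem is not completely understood, it is probably best to provide no solution at all.

- If you can get 90 percent of the desired effect for 10 percent of the work, use the simpler solution.

- Isolate complexity as much as possible.

- Provide mechanism, rather than policy. In particular, place user interface policy in the client's hands.

From Scheifler & Gettys: "X Window System"

1.4 The Layout of /usr/src

The complete source code to FreeBSD is available from the public CVS repository. The source code is normally installed in /usr/src, which contains the following subdirectories: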

| Directory | Description |
|---|---|
| bin/ | Source for files in /bin |
| contrib/ | Source for files from contributed software |
| crypto/ | Cryptographical sources |
| etc/ | Source for files in /etc |
| games/ | Source for files in /usr/games |
| gnu/ | Utilities covered by the GNU General Public License |
| include/ | Source for files in /usr/include |
| kerberos5/ | Source for Kerberos version 5 |
| lib/ | Source for files in /usr/lib |
| libexec/ | Source for files in /usr/libexec |
| release/ | Files required to produce a FreeBSD release |
| rescue/ | Build system for the /rescue utilities |
| sbin/ | Source for files in /sbin |
| secure/ | FreeSec sources |
| share/ | Source for files in /usr/share |
| sys/ | Kernel source files |
| tools/ | Tools used for maintenance and testing of FreeBSD |
| usr.bin/ | Source for files in /usr/bin |
| usr.sbin/ | Source for files in /usr/sbin |

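Chapter 2 Programming Tools

Contributed by James Raynard and Murray Stokely.

2.1 Synopsis

This chapter is an introduction to using some of the programming tools supplied with FreeBSD, although much of it also applies to other versions of UNIX. It does not attempt to describe coding in any detail. Most of the chapter assumes little or no previous programming knowledge, although it is hoped that most programmers will find something of value in it.

2.2 Introduction

FreeBSD offers an excellent development environment. Compilers for C, C++ and Fortran, and an assembler, come with the basic system, not to mention Perl and classic UNIX tools such as sed and awk. If that is not enough, there are many more compilers and interpreters in the Ports collection. FreeBSD is compatible with standards such as POSIX® and ANSI C, as well as with its own BSD heritage, so programs written on FreeBSD will compile and run with little or no modification on a wide range of platforms.

However, all this power can be rather overwhelming at first if you have never written programs on a UNIX platform before. This chapter aims to help you get up and running without going too deeply into advanced topics, and to give you enough of the basics to make sense of the rest of the documentation.

Most of the chapter requires little or no knowledge of programming, although it does assume basic competence with using UNIX and, more importantly, a willingness to learn!

2.3 Introduction to Programming

A program is a set of instructions that tell the computer to do various things; sometimes the instruction it has to perform depends on what happened when it performed a previous instruction. This section gives an overview of the two main ways in which you can give these instructions, or “commands” as they are usually called. One way uses an interpreter, the other a compiler. As human languages are too ambiguous for a computer to understand, commands are usually written in one of several languages specially designed for the purpose.

2.3.1 Interpreters

With an interpreter, the language comes as an environment in which you type commands at a prompt and the environment executes them for you. For more complicated programs, you can type the commands into a file and get the interpreter to load the file and execute the commands in it. If anything goes wrong, many interpreters will drop you into a debugger and show an error message to help you track down the problem.

The advantage of this is that you can see the results of your commands immediately, and mistakes can be corrected readily. The biggest disadvantage comes when you want to share your programs with someone. They must have the same interpreter, and they need to understand how to use it. Users may also not appreciate being thrown into a debugger if they press the wrong key! From a performance point of view, interpreters can use up a lot of memory, and interpreted programs are generally not as efficient as compiled ones.

In my opinion, interpreted languages are the best way to start if you have not done any programming before. This kind of environment is typically found with languages like Lisp, Smalltalk, Perl and Basic. The UNIX shells (sh, csh) are themselves interpreters, and many people do in fact write shell “scripts” to help with various “housekeeping” tasks on their machines. Indeed, part of the UNIX philosophy is to provide lots of small utility programs that can be combined in shell scripts to perform useful tasks.

2.3.2 Interpreters available with FreeBSD

Here is a list of interpreters that are available from the FreeBSD Ports Collection, with a brief discussion of some of the more popular interpreted languages.

Instructions on how to install applications from the Ports Collection can be found in the Ports section of the FreeBSD Handbook.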

- BASIC

Short for Beginner's All-purpose Symbolic Instruction Code. BASIC was developed in the mid-1960s to teach university students to program, and by the 1980s it was the first programming language that many programmers learned. It is also the foundation of Visual Basic.

BASIC interpreters are available from the FreeBSD Ports Collection. The Bywater Basic Interpreter can be found in lang/bwbasic, and Phil Cockroft's Basic Interpreter (formerly Rabbit Basic) in lang/pbasic.

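- Lisp

LISP is an interpreted language that was developed in the late 1950s as an alternative to the “number-crunching” languages that were popular at the time. Instead of being based on numbers, LISP is based on lists; in fact, the name is short for “List Processing”. It is very popular in Artificial Intelligence (AI) circles.

LISP is an extremely powerful and sophisticated language, but its programs can be rather large and unwieldy.

Various implementations of LISP that run on UNIX systems are available in the FreeBSD Ports Collection. GNU Common Lisp can be found in lang/gcl, and CLISP by Bruno Haible and Michael Stoll in lang/clisp. CMUCL, which includes a highly optimizing compiler, is available in lang/cmucl, and simpler LISP implementations such as SLisp, which implements most of the Common Lisp constructs in a few hundred lines of C code, in lang/slisp.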

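- Perl

Very popular with system administrators for writing scripts to manage their machines; also often used on World Wide Web servers for writing CGI scripts.

Perl is available in the Ports Collection as lang/perl5. FreeBSD 4.X installs Perl as /usr/bin/perl.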

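- Scheme

A dialect of LISP that is more compact and cleaner than Common LISP. Because Scheme is simple, many universities teach it as a first programming language, and it also lets researchers develop the programs they need quickly.

The Elk Scheme Interpreter is available in the Ports Collection as lang/elk. The MIT Scheme Interpreter can be found in lang/mit-scheme and the SCM Scheme Interpreter in lang/scm.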

- Icon

Icon is a high-level language with extensive facilities for processing strings and structures. The version of Icon in the FreeBSD Ports Collection can be found in lang/icon.

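- Logo

Logo is a language that is easy to learn and is often used as an introductory language in teaching. It is an excellent tool for teaching programming to young children, because drawing complex geometric shapes with it is easy even for them.

The latest version of Logo for FreeBSD is available from the Ports Collection in lang/logo.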

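- Python

Python is an object-oriented, interpreted language. Its advocates argue that it is the best language to start programming with. Although it is easy to get started with, it is not limited in comparison to other popular interpreted languages such as Perl and Tcl, and it is also used to develop large, complex applications.

Python is available from the Ports Collection in lang/python.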

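- Ruby

Ruby is an interpreted, pure object-oriented programming language. It has become widely popular because of its easy to understand syntax, flexibility when writing code, and the ability to easily develop and maintain large, complex programs.

Ruby is available from the Ports Collection as lang/ruby18.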

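- Tcl and Tk

Tcl is an embeddable, interpreted language that has become widely used, mostly because of its portability. It can be used both for quickly writing small prototype applications and for building fully featured programs.

Various versions of Tcl are available as ports for FreeBSD. The latest version, Tcl 8.4, can be found in lang/tcl84.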

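2.3.3 Compilers

Compilers are rather different. First of all, you write your code in a file (or files). You then run the compiler and see if it accepts your program. If it does not, you go back and fix the code until the compiler accepts it and produces an executable. You can then run the compiled program at a shell command prompt or in a debugger to see if it works properly. [1]

Obviously, this does not give results as immediately as an interpreter does. However, a compiler allows you to do a lot of things which are very difficult or even impossible with an interpreter, such as writing code which interacts closely with the operating system, or even writing your own operating system! It is also useful if you need to write very efficient code, as the compiler can take its time and optimize the code, which would not be acceptable in an interpreter. Moreover, distributing a compiled program is usually more straightforward: you can copy the compiled executable to another machine and run it there, provided that machine runs the same operating system, whereas an interpreted program requires the same interpreter to be installed on the new machine before it can run.

Compiled languages include Pascal, C and C++. C and C++ are rather unforgiving languages, best suited to more experienced programmers. Pascal, on the other hand, was designed as an educational language and is quite a good language to start with. FreeBSD does not include Pascal support in the base system, but both the GNU Pascal Compiler and the Free Pascal Compiler are available in the Ports Collection as lang/gpc and lang/fpc.

As the edit-compile-run-debug cycle is rather tedious when using separate programs, many commercial compiler makers have produced Integrated Development Environments (IDEs for short). FreeBSD does not include an IDE in the base system, but devel/kdevelop is available in the Ports Collection and many people use Emacs for this purpose. Using Emacs as an IDE is discussed in Section 2.7.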

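2.4 Compiling with cc

This section deals only with the GNU compiler for C and C++, since that comes with the base FreeBSD system. It can be invoked by either cc or gcc. The details of producing a program with an interpreter are usually well covered in the documentation and on-line help for the interpreter, so they are not repeated here.

Once you have written your masterpiece, the next step is to convert it into something that will run on FreeBSD. This usually involves several steps, some of which are done by separate programs.

1. Pre-process your source code to remove comments and do other tricks like expanding macros in C.

2. Check the syntax of your code to see if you have obeyed the rules of C/C++. If you have not, the compiler will complain.

3. Convert the source code into assembly language, which is very close to machine code but still understandable by humans (allegedly). [2]

4. Convert the assembly language into machine code. Yes, we are talking bits and bytes, ones and zeros here.

5. Check that you have used things like functions and global variables in a consistent way. For example, if you have called a non-existent function, the compiler will complain.

6. If you are trying to produce an executable from several source code files, work out how to fit them all together.

7. Work out how to produce something that the system's run-time loader will be able to load into memory and run.

8. Finally, write the executable to disk.

The word compiling is often used to refer to just steps 1 to 4; the others are referred to as linking. Sometimes step 1 is referred to as pre-processing and steps 3 and 4 as assembling.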

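Fortunately, almost all this detail is hidden from you, as cc is only a front end that calls all these programs with the right arguments for you. Simply typing

% cc foobar.c

will cause foobar.c to be compiled by all the steps above. If you have more than one file to compile, just do something like

% cc foo.c bar.c

Note that the syntax checking is just that: checking the syntax. It will not check for any logical mistakes you may have made, like putting the program into an infinite loop, or using a bubble sort when you meant to use a binary sort. [3]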

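There are lots and lots of options for cc, which are all in the manual page. Here are a few of the most important ones, with examples of how to use them.

-o filename

The output name of the executable. If you do not use this option, cc will produce an executable called a.out. [4]

-c

Just compile the file, do not link it. Useful when you only want to check the syntax, or when you are using a Makefile.

% cc -c foobar.c

This will produce an object file (not an executable) called foobar.o. This can be linked together with other object files into an executable.

-g

Create a debug version of the executable. This makes the compiler put information into the executable for gdb, such as which line of which source file corresponds to which function call. This debugging information is very useful; the disadvantage is that it makes the program bigger. Normally, you compile with -g while you are developing a program, and compile a “release version” without -g once you are satisfied that it works properly.

% cc -g foobar.c

This will produce a debug version of the program. [5]

-O

Create an optimized version of the executable. The compiler performs various tricks to try to produce an executable that runs faster than normal. You can add a number after the -O to specify the level of optimization. Optimization can still introduce errors, however: for instance, the version of cc that comes with the 2.1.0 release of FreeBSD is known to produce bad code from the -O2 option in some circumstances. Optimization is usually only turned on when compiling a release version or when the program needs to run faster.

% cc -O -o foobar foobar.c

This will produce an optimized version of foobar.

The following three options will force cc to check that your code complies with the relevant international standard, often referred to as the ANSI standard, though strictly speaking it is an ISO standard.

-Wall

Enable all the warnings which the authors of cc believe are worthwhile. Despite the name, it will not enable all the warnings cc is capable of.

-ansi

Turn off most, but not all, of the non-ANSI C features provided by cc. Despite the name, it does not guarantee that your code will strictly comply with the standard.

-pedantic

Turn off all of cc's non-ANSI C features.

Without these flags, cc will allow you to use some of its non-standard extensions. Some of these are very useful, but will not work with other compilers. One of the main aims of the standard is to allow people to write code that will compile and run with any compiler; this is known as portable code.

Generally, you should try to make your code as portable as possible. Otherwise, you may have to rewrite the program later to get it to work on another machine.

% cc -Wall -ansi -pedantic -o foobar foobar.c

This will produce an executable foobar after checking foobar.c for standard compliance.

-llibrary

Specify a function library to be used at link time.

The most common example of this is when you are compiling a program that uses some of the mathematical functions in C. Unlike on most other platforms, these are in a separate library from the standard C one, and you have to tell the compiler to add it.

The rule is that if the library is called libsomething.a, you give cc the argument -lsomething. For example, the math library is libm.a, so you give cc the argument -lm. Normally, this argument should be put at the end of the command line.

% cc -o foobar foobar.c -lm

This will link the math library functions into foobar.

If you are compiling C++ code, you also need to add -lg++ (or -lstdc++ on FreeBSD 2.2 and later) to the command line so that the C++ library functions are linked in. Alternatively, you can run c++ instead of cc, which does this for you. On FreeBSD, c++ can also be invoked as g++.

% cc -o foobar foobar.cc -lg++       For FreeBSD 2.1.6 and earlier
% cc -o foobar foobar.cc -lstdc++    For FreeBSD 2.2 and later
% c++ -o foobar foobar.cc

Each of these will produce an executable foobar from the C++ source file foobar.cc. Note that, on UNIX systems, C++ source files traditionally end in .C, .cxx or .cc, rather than the MS-DOS® style .cpp (although that is becoming more and more common). gcc used to rely on the extension to work out what kind of compiler to use on the source file; however, this restriction no longer applies, so you may now call your C++ files .cpp with impunity!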

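2.4.1 Common cc Queries and Problems

- 2.4.1.1. I wrote a program that uses the sin() function, but I get an error message. What does it mean?

- 2.4.1.2. All right, I wrote a simple program to practice using -lm. All it does is raise 2.1 to the power of 6.

- 2.4.1.3. So how do I fix this?

- 2.4.1.4. I compiled a file called foobar.c and I cannot find an executable called foobar. Where has it gone?

- 2.4.1.5. OK, I have an executable called foobar, I can see it when I run ls, but when I try to run it I am told there is no such file. Why?

- 2.4.1.6. I called my executable test, but nothing happens when I run it. What is going on?

- 2.4.1.7. I compiled my program and it seemed to run all right at first, then there was an error and it said something about “core dumped”. What does that mean?

- 2.4.1.8. Fascinating stuff! But my program dumped core; what am I supposed to do now?

- 2.4.1.9. When my program dumped core, it said something about a “segmentation fault”. What is that?

- 2.4.1.10. Sometimes when I get a core dump it says “bus error”. It says in my UNIX book that this means a hardware problem, but the computer still seems to be working. Is this true?

- 2.4.1.11. This dumping core business sounds as though it could be quite useful, if I can make it happen when I want to. Can I do this, or do I have to wait until there is an error?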

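2.4.1.2. All right, I wrote a simple program to practice using -lm. All it does is raise 2.1 to the power of 6.

I compiled and ran it, but the result it printed was clearly not the right answer. What is going on?

When the compiler sees you call a function, it checks whether it has already seen a prototype for it, which declares the function's return type. If it has not, it assumes the function returns an int, which is definitely not what you want here.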

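2.4.1.7. I compiled my program and it seemed to run all right at first, then there was an error and it said something about “core dumped”. What does that mean?

The name core dump dates back to the very early days of UNIX, when the machines used core memory for storing data. Basically, if the program failed under certain conditions, the system would write the contents of core memory to disk in a file called core, which the programmer could then examine to find out what went wrong.

2.4.1.8. Fascinating stuff! But my program dumped core; what am I supposed to do now?

Use gdb to analyze the core (see Section 2.6).

2.4.1.9. When my program dumped core, it said something about a “segmentation fault”. What is that?

This basically means that your program tried to perform some sort of illegal operation on memory; UNIX is designed to protect the operating system from rogue programs, which is why it tells you this.

Common causes of a “segmentation fault” are: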

- Trying to write to a NULL pointer, e.g.

    char *foo = NULL;
    strcpy(foo, "bang!");

- Using a pointer that has not been initialized, e.g.

    char *foo;
    strcpy(foo, "bang!");

  The pointer will have some random value that, with luck, will point into an area of memory that is not available to your program, and the kernel will kill your program before it can do any damage. If you are unlucky, it will point somewhere inside your own program and corrupt one of your data structures, causing the program to fail mysteriously.

- Trying to access past the end of an array, e.g.

    int bar[20];
    bar[27] = 6;

- Trying to store something in read-only memory, e.g.

    char *foo = "My string";
    strcpy(foo, "bang!");

  UNIX compilers often put string literals like "My string" into read-only areas of memory.

- Doing naughty things with malloc() and free(), e.g.

    char bar[80];
    free(bar);

  or

    char *foo = malloc(27);
    free(foo);
    free(foo);

Making one of these mistakes will not always lead to an error, but they are always bad practice. Some systems and compilers are more tolerant than others, which is why programs that ran well on one system can crash when you try them on another.

2.4.1.10. Sometimes when I get a core dump it says “bus error”. It says in my UNIX book that this means a hardware problem, but the computer still seems to be working. Is this true?

No, fortunately not (unless of course you really do have a hardware problem...). This is usually another way of saying that you accessed memory in a way you should not have.

2.4.1.11. This dumping core business sounds as though it could be quite useful, if I can make it happen when I want to. Can I do this, or do I have to wait until there is an error?

Yes, just go to another console or xterm, do

% ps

to find out the process ID of your program, and do

% kill -ABRT pid

where pid is the process ID you looked up.

This is useful if your program has got stuck in an infinite loop, for instance. If your program happens to trap SIGABRT, there are several other signals which have a similar effect.

Alternatively, you can create a core dump from inside your program, by calling the abort() function. See the manual page of abort(3) to learn more.

If you want to create a core dump from outside your program, but do not want the process to terminate, you can use the gcore program. See the manual page of gcore(1) for more information.

2.5 Make

2.5.1 What is make?

When you are working on a simple program with only one or two source files, typing in

% cc file1.c file2.c

is not too bad, but it quickly becomes very tedious when there are several files, and it can take a while to compile, too.

One way to get around this is to use object files and only recompile the source file if the source code has changed. So we could have something like:

% cc file1.o file2.o ... file37.c ...

if we had changed file37.c, but not any of the others, since the last time we compiled. This may speed up the compilation quite a bit, but does not solve the typing problem.

Or we could write a shell script to solve the typing problem, but it would have to re-compile everything, making it very inefficient on a large project.

What happens if we have hundreds of source files lying about? What if we are working in a team with other people who forget to tell us when they have changed one of their source files that we use?

Perhaps we could put the two solutions together and write something like a shell script that would contain some kind of magic rule saying when a source file needs compiling. All we need now is a program that can understand these rules, as it is a bit too complicated for the shell.

This program is called make. It reads in a file, called a makefile, that tells it how different files depend on each other, and works out which files need to be re-compiled and which ones do not. For example, a rule could say something like “if fromboz.o is older than fromboz.c, that means someone must have changed fromboz.c, so it needs to be re-compiled.” The makefile also has rules telling make how to re-compile the source file, making it a much more powerful tool.

Makefiles are typically kept in the same directory as the source they apply to, and can be called makefile, Makefile or MAKEFILE. Most programmers use the name Makefile, as this puts it near the top of a directory listing, where it can easily be seen. [6]

2.5.2 Example of using make

Here is a very simple makefile:

foo: foo.c
	cc -o foo foo.c

It consists of two lines, a dependency line and a creation line.

The dependency line here consists of the name of the program (known as the target), followed by a colon, then whitespace, then the name of the source file. When make reads this line, it looks to see if foo exists; if it exists, it compares the time foo was last modified to the time foo.c was last modified. If foo does not exist, or is older than foo.c, it then looks at the creation line to find out what to do. In other words, this is the rule for working out when foo.c needs to be re-compiled.

The creation line starts with a tab (press the tab key) and then the command you would type to create foo if you were doing it at a command prompt. If foo is out of date, or does not exist, make then executes this command to create it. In other words, this is the rule which tells make how to re-compile foo.c.

So, when you type make, it will make sure that foo is up to date with respect to your latest changes to foo.c. This principle can be extended to Makefiles with hundreds of targets; in fact, on FreeBSD, it is possible to compile the entire operating system just by typing make world in the appropriate directory!

Another useful property of makefiles is that the targets do not have to be programs. For instance, we could have a makefile that looks like this:

foo: foo.c
	cc -o foo foo.c

install:
	cp foo /home/me

We can tell make which target we want to make by typing:

% make target

make will then only look at that target and ignore any others. For example, if we type make foo with the makefile above, make will ignore the install target.

If we just type make on its own, make will always look at the first target and then stop without looking at any others. So if we typed make here, it will just go to the foo target, re-compile foo if necessary, and then stop without going on to the install target.

Notice that the install target does not actually depend on anything! This means that the command on the following line is always executed when we try to make that target by typing make install. In this case, it will copy foo into the user's home directory. This is often used by application makefiles, so that the application can be installed in the correct directory when it has been correctly compiled.

This is a slightly confusing subject to try to explain. If you do not quite understand how make works, the best thing to do is to write a simple program like “hello world” and a makefile like the one above and experiment. Then progress to using more than one source file, or having the source file include a header file. The touch command is very useful here: it changes the date on a file without you having to edit it.

2.5.3 Make and include-files

C code often starts with a list of files to include, for example stdio.h. Some of these files are system-include files, some of them are from the project you are now working on:

#include <stdio.h>
#include "foo.h"

int main(....

To make sure that this file is recompiled the moment foo.h is changed, you have to add it in your Makefile:

foo: foo.c foo.h

As your project grows and you have more and more of your own include-files to maintain, it becomes a pain to keep track of all the include files and the files that depend on them. If you change an include-file but forget to recompile all the files that depend on it, the results will be devastating. gcc has an option to analyze your files and to produce a list of include-files and their dependencies: -MM.

If you add this to your Makefile:

depend:
	gcc -E -MM *.c > .depend

and run make depend, the file .depend will appear with a list of object-files, C-files and the include-files:

foo.o: foo.c foo.h

If you change foo.h, next time you run make all files depending on foo.h will be recompiled.

Do not forget to run make depend each time you add an include-file to one of your files.

2.5.4 FreeBSD Makefiles

Makefiles can be rather complicated to write. Fortunately, BSD-based systems like FreeBSD come with some very powerful ones as part of the system. One very good example of this is the FreeBSD ports system. Here is the essential part of a typical ports Makefile:

MASTER_SITES=   ftp://freefall.cdrom.com/pub/FreeBSD/LOCAL_PORTS/
DISTFILES=      scheme-microcode+dist-7.3-freebsd.tgz

.include <bsd.port.mk>

Now, if we go to the directory for this port and type make, the following happens:

- A check is made to see if the source code for this port is already on the system.

- If it is not, an FTP connection to the URL in MASTER_SITES is set up to download the source.

- The checksum for the source is calculated and compared with one for a known, good copy of the source. This is to make sure that the source was not corrupted while in transit.

- Any changes required to make the source work on FreeBSD are applied; this is known as patching.

- Any special configuration needed for the source is done. (Many UNIX program distributions try to work out which version of UNIX they are being compiled on and which optional UNIX features are present; this is where they are given the information in the FreeBSD ports scenario.)

- The source code for the program is compiled. In effect, we change to the directory where the source was unpacked and do make; the program's own makefile has the necessary information to build the program.

- We now have a compiled version of the program. If we wish, we can test it now; when we feel confident about the program, we can type make install. This will cause the program and any supporting files it needs to be copied into the correct location; an entry is also made into a package database, so that the port can easily be uninstalled later if we change our mind about it.

Now I think you will agree that is rather impressive for a four line script!

The secret lies in the last line, which tells make to look in the system makefile called bsd.port.mk. It is easy to overlook this line, but this is where all the clever stuff comes from: someone has written a makefile that tells make to do all the things above (plus a couple of other things I did not mention, including handling any errors that may occur) and anyone can get access to that just by putting a single line in their own makefile!

If you want to have a look at these system makefiles, they are in /usr/share/mk, but it is probably best to wait until you have had a bit of practice with makefiles, as they are very complicated (and if you do look at them, make sure you have a flask of strong coffee handy!)

2.5.5 More advanced uses of make

Make is a very powerful tool, and can do much more than the simple example above shows. Unfortunately, there are several different versions of make, and they all differ considerably. The best way to learn what they can do is probably to read the documentation; hopefully this introduction will have given you a base from which you can do this.

The version of make that comes with FreeBSD is the Berkeley make; there is a tutorial for it in /usr/share/doc/psd/12.make. To view it, do

% zmore paper.ascii.gz

in that directory.

Many applications in the ports use GNU make, which has a very good set of “info” pages. If you have installed any of these ports, GNU make will automatically have been installed as gmake. It is also available as a port and package in its own right.

To view the info pages for GNU make, you will have to edit the dir file in the /usr/local/info directory to add an entry for it. This involves adding a line like

* Make: (make). The GNU Make utility.

to the file. Once you have done this, you can type info and then select make from the menu (or in Emacs, do C-h i).

2.6 Debugging

2.6.1 The Debugger

The debugger that comes with FreeBSD is called gdb (GNU debugger). You start it up by typing

% gdb progname

although most people prefer to run it inside Emacs. You can do this by:

M-x gdb RET progname RET

Using a debugger allows you to run the program under more controlled circumstances. Typically, you can step through the program a line at a time, inspect the value of variables, change them, tell the debugger to run up to a certain point and then stop, and so on. You can even attach to a program that is already running, or load a core file to investigate why the program crashed. It is even possible to debug the kernel, though that is a little trickier than the user applications we will be discussing in this section.

gdb has quite good on-line help, as well as a set of info pages, so this section will concentrate on a few of the basic commands.

Finally, if you find its text-based command-prompt style off-putting, there is a graphical front-end for it (xxgdb) in the ports collection.

This section is intended to be an introduction to using gdb and does not cover specialized topics such as debugging the kernel.

2.6.2 Running a program in the debugger

You will need to have compiled the program with the -g option to get the most out of using gdb. It will work without, but you will only see the name of the function you are in, instead of the source code. If you see a line like:

... (no debugging symbols found) ...

when gdb starts up, you will know that the program was not compiled with the -g option.

At the gdb prompt, type break main. This will tell the debugger to skip over the preliminary set-up code in the program and start at the beginning of your code. Now type run to start the program; it will start at the beginning of the set-up code and then get stopped by the debugger when it calls main(). (If you have ever wondered where main() gets called from, now you know!)

You can now step through the program, a line at a time, by pressing n. If you get to a function call, you can step into it by pressing s. Once you are in a function call, you can return from stepping into a function call by pressing f. You can also use up and down to take a quick look at the caller.

Here is a simple example of how to spot a mistake in a program with gdb. This is our program (with a deliberate mistake):

#include <stdio.h>

int bazz(int anint);

int main(void) {
	int i;

	printf("This is my program\n");
	bazz(i);
	return 0;
}

int bazz(int anint) {
	printf("You gave me %d\n", anint);
	return anint;
}

This program is meant to set i to 5 and pass it to a function bazz(), which prints out the number we gave it.

When we compile and run the program we get

% cc -g -o temp temp.c
% ./temp
This is my program
You gave me 4231

That was not what we expected! Time to see what is going on!

% gdb temp
GDB is free software and you are welcome to distribute copies of it
 under certain conditions; type "show copying" to see the conditions.
There is absolutely no warranty for GDB; type "show warranty" for details.
GDB 4.13 (i386-unknown-freebsd), Copyright 1994 Free Software Foundation, Inc.
(gdb) break main                               Skip the set-up code
Breakpoint 1 at 0x160f: file temp.c, line 9.   gdb puts breakpoint at main()
(gdb) run                                      Run as far as main()
Starting program: /home/james/tmp/temp         Program starts running

Breakpoint 1, main () at temp.c:9              gdb stops at main()
(gdb) n                                        Go to next line
This is my program                             Program prints out
(gdb) s                                        step into bazz()
bazz (anint=4231) at temp.c:17                 gdb displays stack frame
(gdb)

Hang on a minute! How did anint get to be 4231? Did we not set it to be 5 in main()? Let's move up to main() and have a look.

(gdb) up Move up call stack

#1 0x1625 in main () at temp.c:11 gdb displays stack frame

(gdb) p i Show us the value of i

$1 = 4231 gdb displays 4231

Oh dear! Looking at the code, we forgot to initialize i.

We meant to put

...

main() {

int i;

i = 5;

printf("This is my program\n");

...

but we left the i=5; line out. As we did not initialize i, it had whatever number happened to be in that area of memory when the program ran, which in this case happened to be 4231.

Note: gdb displays the stack frame every time we go into or out of a function, even if we are using up and down to move around the call stack. This shows the name of the function and the values of its arguments, which helps us keep track of where we are and what is going on. (The stack is a storage area where the program stores information about the arguments passed to functions and where to go when it returns from a function call).

2.6.3 Examining a core file

A core file is basically a file which contains the complete state of the process when it crashed. In “the good old days”, programmers had to print out hex listings of core files and sweat over machine code manuals, but now life is a bit easier. Incidentally, under FreeBSD and other 4.4BSD systems, a core file is called progname.core instead of just core, to make it clearer which program a core file belongs to.

To examine a core file, start up gdb in the usual way. Instead of typing break or run, type

(gdb) core progname.core

If you are not in the same directory as the core file, you will have to do dir /path/to/core/file first.

You should see something like this:

% gdb a.out

GDB is free software and you are welcome to distribute copies of it under certain conditions; type "show copying" to see the conditions.

There is absolutely no warranty for GDB; type "show warranty" for details.

GDB 4.13 (i386-unknown-freebsd), Copyright 1994 Free Software Foundation, Inc.

(gdb) core a.out.core

Core was generated by `a.out'.

Program terminated with signal 11, Segmentation fault.

Cannot access memory at address 0x7020796d.

#0 0x164a in bazz (anint=0x5) at temp.c:17

(gdb)

In this case, the program was called a.out, so the core file is called a.out.core. We can see that the program crashed in a function called bazz because it tried to access an area of memory that was not available to it.

Sometimes it is useful to be able to see how a function was called, as the problem could have occurred a long way up the call stack in a complex program. The bt command causes gdb to print out a back-trace of the call stack:

(gdb) bt

#0 0x164a in bazz (anint=0x5) at temp.c:17

#1 0xefbfd888 in end ()

#2 0x162c in main () at temp.c:11

(gdb)

The end() function is called when a program crashes; in this case, the bazz() function was called from main().

2.6.4 Attaching to a running program

One of the neatest features of gdb is that it can attach to a program that is already running. Of course, that assumes you have sufficient permissions to do so. A common problem arises when you are stepping through a program that forks and you want to trace the child, but the debugger will only let you trace the parent.

What you do is start up another gdb, use ps to find the process ID for the child, and do

(gdb) attach pid

in gdb, and then debug as usual.

“That is all very well,” you are probably thinking, “but by the time I have done that, the child process will be over the hill and far away”. Fear not, gentle reader, here is how to do it (courtesy of the gdb info pages):

...

if ((pid = fork()) < 0) /* _Always_ check this */

error();

else if (pid == 0) { /* child */

int PauseMode = 1;

while (PauseMode)

sleep(10); /* Wait until someone attaches to us */

...

} else { /* parent */

...

Now all you have to do is attach to the child, set PauseMode to 0, and wait for the sleep() call to return!
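The fragment above can be fleshed out into a small helper. This is only a sketch under a few assumptions: the names pause_mode and wait_for_debugger() are hypothetical, the flag is declared volatile so that an optimizing compiler re-reads it after the debugger has changed it, and a poll limit is added so that a forgotten child does not sleep forever.

```c
#include <unistd.h>

/*
 * Cleared from the debugger once it has attached, for example with
 *   (gdb) set var pause_mode = 0
 * volatile forces the loop below to re-read the flag on every pass.
 */
volatile int pause_mode = 1;

/*
 * Poll every interval_sec seconds until pause_mode is cleared or
 * max_polls polls have elapsed. Returns the number of polls performed.
 */
unsigned int
wait_for_debugger(unsigned int interval_sec, unsigned int max_polls)
{
	unsigned int polls = 0;

	while (pause_mode && polls < max_polls) {
		sleep(interval_sec);
		polls++;
	}
	return (polls);
}
```

In the child branch of the fork() the call might look like wait_for_debugger(10, 60), which leaves roughly ten minutes to find the process ID and attach.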

2.7 Using Emacs as a Development Environment

2.7.1 Emacs

Unfortunately, UNIX systems do not come with the kind of everything-you-ever-wanted-and-lots-more-you-did-not-in-one-gigantic-package integrated development environments that other systems have. [7] However, it is possible to set up your own environment. It may not be as pretty, and it may not be quite as integrated, but you can set it up the way you want it. And it is free. And you have the source to it.

The key to it all is Emacs. Now there are some people who loathe it, but many who love it. If you are one of the former, I am afraid this section will hold little of interest to you. Also, you will need a fair amount of memory to run it; I would recommend 8MB in text mode and 16MB in X as the bare minimum to get reasonable performance.

Emacs is basically a highly customizable editor; indeed, it has been customized to the point where it is more like an operating system than an editor! Many developers and sysadmins do in fact spend practically all their time working inside Emacs, leaving it only to log out.

It is impossible even to summarize everything Emacs can do here, but here are some of the features of interest to developers:

-

Very powerful editor, allowing search-and-replace on both strings and regular expressions (patterns), jumping to start/end of block expression, etc, etc.

-

Pull-down menus and online help.

-

Language-dependent syntax highlighting and indentation.

-

Completely customizable.

-

You can compile and debug programs within Emacs.

-

On a compilation error, you can jump to the offending line of source code.

-

Friendly-ish front-end to the info program used for reading GNU hypertext documentation, including the documentation on Emacs itself.

-

Friendly front-end to gdb, allowing you to look at the source code as you step through your program.

-

You can read Usenet news and mail while your program is compiling.

And doubtless many more that I have overlooked.

Emacs can be installed on FreeBSD using the Emacs port.

Once it is installed, start it up and do C-h t to read an Emacs tutorial; that means hold down the control key, press h, let go of the control key, and then press t. (Alternatively, you can use the mouse to select Emacs Tutorial from the Help menu).

Although Emacs does have menus, it is well worth learning the key bindings, as it is much quicker when you are editing something to press a couple of keys than to try to find the mouse and then click on the right place. And, when you are talking to seasoned Emacs users, you will find they often casually throw around expressions like “M-x replace-s RET foo RET bar RET” so it is useful to know what they mean. And in any case, Emacs has far too many useful functions for them to all fit on the menu bars.

Fortunately, it is quite easy to pick up the key-bindings, as they are displayed next to the menu item. My advice is to use the menu item for, say, opening a file until you understand how it works and feel confident with it, then try doing C-x C-f. When you are happy with that, move on to another menu command.

If you cannot remember what a particular combination of keys does, select Describe Key from the Help menu and type it in; Emacs will tell you what it does. You can also use the Command Apropos menu item to find out all the commands which contain a particular word in them, with the key binding next to it.

By the way, the expression above means hold down the Meta key, press x, release the Meta key, type replace-s (short for replace-string; another feature of Emacs is that you can abbreviate commands), press the return key, type foo (the string you want replaced), press the return key, type bar (the string you want to replace foo with) and press return again. Emacs will then do the search-and-replace operation you have just requested.

If you are wondering what on earth the Meta key is, it is a special key that many UNIX workstations have. Unfortunately, PCs do not have one, so it is usually the alt key (or if you are unlucky, the escape key).

Oh, and to get out of Emacs, do C-x C-c (that means hold down the control key, press x, press c and release the control key). If you have any unsaved files open, Emacs will ask you if you want to save them. (Ignore the bit in the documentation where it says C-z is the usual way to leave Emacs; that leaves Emacs hanging around in the background, and is only really useful if you are on a system which does not have virtual terminals).

2.7.2 Configuring Emacs

Emacs does many wonderful things; some of them are built in, some of them need to be configured.

Instead of using a proprietary macro language for configuration, Emacs uses a version of Lisp specially adapted for editors, known as Emacs Lisp. Working with Emacs Lisp can be quite helpful if you want to go on and learn something like Common Lisp. Emacs Lisp has many features of Common Lisp, although it is considerably smaller (and thus easier to master).

The best way to learn Emacs Lisp is to download the Emacs Tutorial.

However, there is no need to actually know any Lisp to get started with configuring Emacs, as I have included a sample .emacs file, which should be enough to get you started. Just copy it into your home directory and restart Emacs if it is already running; it will read the commands from the file and (hopefully) give you a useful basic setup.

2.7.3 A sample .emacs file

Unfortunately, there is far too much here to explain it in detail; however there are one or two points worth mentioning.

-

Everything beginning with a ; is a comment and is ignored by Emacs.

-

In the first line, the -*- Emacs-Lisp -*- is so that we can edit the .emacs file itself within Emacs and get all the fancy features for editing Emacs Lisp. Emacs usually tries to guess this based on the filename, and may not get it right for .emacs.

-

The tab key is bound to an indentation function in some modes, so when you press the tab key, it will indent the current line of code. If you want to put a tab character in whatever you are writing, hold the control key down while you are pressing the tab key.

-

This file supports syntax highlighting for C, C++, Perl, Lisp and Scheme, by guessing the language from the filename.

-

Emacs already has a pre-defined function called next-error. In a compilation output window, this allows you to move from one compilation error to the next by doing M-n; we define a complementary function, previous-error, that allows you to go to a previous error by doing M-p. The nicest feature of all is that C-c C-c will open up the source file in which the error occurred and jump to the appropriate line.

-

We enable Emacs's ability to act as a server, so that if you are doing something outside Emacs and you want to edit a file, you can just type in

% emacsclient filename

and then you can edit the file in your Emacs! [8]

Example 2-1. A sample .emacs file

;; -*-Emacs-Lisp-*-

;; This file is designed to be re-evaled; use the variable first-time

;; to avoid any problems with this.

(defvar first-time t

"Flag signifying this is the first time that .emacs has been evaled")

;; Meta

(global-set-key "\M- " 'set-mark-command)

(global-set-key "\M-\C-h" 'backward-kill-word)

(global-set-key "\M-\C-r" 'query-replace)

(global-set-key "\M-r" 'replace-string)

(global-set-key "\M-g" 'goto-line)

(global-set-key "\M-h" 'help-command)

;; Function keys

(global-set-key [f1] 'manual-entry)

(global-set-key [f2] 'info)

(global-set-key [f3] 'repeat-complex-command)

(global-set-key [f4] 'advertised-undo)

(global-set-key [f5] 'eval-current-buffer)

(global-set-key [f6] 'buffer-menu)

(global-set-key [f7] 'other-window)

(global-set-key [f8] 'find-file)

(global-set-key [f9] 'save-buffer)

(global-set-key [f10] 'next-error)

(global-set-key [f11] 'compile)

(global-set-key [f12] 'grep)

(global-set-key [C-f1] 'compile)

(global-set-key [C-f2] 'grep)

(global-set-key [C-f3] 'next-error)

(global-set-key [C-f4] 'previous-error)

(global-set-key [C-f5] 'display-faces)

(global-set-key [C-f8] 'dired)

(global-set-key [C-f10] 'kill-compilation)

;; Keypad bindings

(global-set-key [up] "\C-p")

(global-set-key [down] "\C-n")

(global-set-key [left] "\C-b")

(global-set-key [right] "\C-f")

(global-set-key [home] "\C-a")

(global-set-key [end] "\C-e")

(global-set-key [prior] "\M-v")

(global-set-key [next] "\C-v")

(global-set-key [C-up] "\M-\C-b")

(global-set-key [C-down] "\M-\C-f")

(global-set-key [C-left] "\M-b")

(global-set-key [C-right] "\M-f")

(global-set-key [C-home] "\M-<")

(global-set-key [C-end] "\M->")

(global-set-key [C-prior] "\M-<")

(global-set-key [C-next] "\M->")

;; Mouse

(global-set-key [mouse-3] 'imenu)

;; Misc

(global-set-key [C-tab] "\C-q\t") ; Control tab quotes a tab.

(setq backup-by-copying-when-mismatch t)

;; Treat 'y' or <CR> as yes, 'n' as no.

(fset 'yes-or-no-p 'y-or-n-p)

(define-key query-replace-map [return] 'act)

(define-key query-replace-map [?\C-m] 'act)

;; Load packages

(require 'desktop)

(require 'tar-mode)

;; Pretty diff mode

(autoload 'ediff-buffers "ediff" "Intelligent Emacs interface to diff" t)

(autoload 'ediff-files "ediff" "Intelligent Emacs interface to diff" t)

(autoload 'ediff-files-remote "ediff"

"Intelligent Emacs interface to diff")

(if first-time

(setq auto-mode-alist

(append '(("\\.cpp$" . c++-mode)

("\\.hpp$" . c++-mode)

("\\.lsp$" . lisp-mode)

("\\.scm$" . scheme-mode)

("\\.pl$" . perl-mode)

) auto-mode-alist)))

;; Auto font lock mode

(defvar font-lock-auto-mode-list

(list 'c-mode 'c++-mode 'c++-c-mode 'emacs-lisp-mode 'lisp-mode 'perl-mode 'scheme-mode)

"List of modes to always start in font-lock-mode")

(defvar font-lock-mode-keyword-alist

'((c++-c-mode . c-font-lock-keywords)

(perl-mode . perl-font-lock-keywords))

"Associations between modes and keywords")

(defun font-lock-auto-mode-select ()

"Automatically select font-lock-mode if the current major mode is in font-lock-auto-mode-list"

(if (memq major-mode font-lock-auto-mode-list)

(progn

(font-lock-mode t))

)

)

(global-set-key [M-f1] 'font-lock-fontify-buffer)

;; New dabbrev stuff

;(require 'new-dabbrev)

(setq dabbrev-always-check-other-buffers t)

(setq dabbrev-abbrev-char-regexp "\\sw\\|\\s_")

(add-hook 'emacs-lisp-mode-hook

'(lambda ()

(set (make-local-variable 'dabbrev-case-fold-search) nil)

(set (make-local-variable 'dabbrev-case-replace) nil)))

(add-hook 'c-mode-hook

'(lambda ()

(set (make-local-variable 'dabbrev-case-fold-search) nil)

(set (make-local-variable 'dabbrev-case-replace) nil)))

(add-hook 'text-mode-hook

'(lambda ()

(set (make-local-variable 'dabbrev-case-fold-search) t)

(set (make-local-variable 'dabbrev-case-replace) t)))

;; C++ and C mode...

(defun my-c++-mode-hook ()

(setq tab-width 4)

(define-key c++-mode-map "\C-m" 'reindent-then-newline-and-indent)

(define-key c++-mode-map "\C-ce" 'c-comment-edit)

(setq c++-auto-hungry-initial-state 'none)

(setq c++-delete-function 'backward-delete-char)

(setq c++-tab-always-indent t)

(setq c-indent-level 4)

(setq c-continued-statement-offset 4)

(setq c++-empty-arglist-indent 4))

(defun my-c-mode-hook ()

(setq tab-width 4)

(define-key c-mode-map "\C-m" 'reindent-then-newline-and-indent)

(define-key c-mode-map "\C-ce" 'c-comment-edit)

(setq c-auto-hungry-initial-state 'none)

(setq c-delete-function 'backward-delete-char)

(setq c-tab-always-indent t)

;; BSD-ish indentation style

(setq c-indent-level 4)

(setq c-continued-statement-offset 4)

(setq c-brace-offset -4)

(setq c-argdecl-indent 0)

(setq c-label-offset -4))

;; Perl mode

(defun my-perl-mode-hook ()

(setq tab-width 4)

(define-key perl-mode-map "\C-m" 'reindent-then-newline-and-indent)

(setq perl-indent-level 4)

(setq perl-continued-statement-offset 4))

;; Scheme mode...

(defun my-scheme-mode-hook ()

(define-key scheme-mode-map "\C-m" 'reindent-then-newline-and-indent))

;; Emacs-Lisp mode...

(defun my-lisp-mode-hook ()

(define-key lisp-mode-map "\C-m" 'reindent-then-newline-and-indent)

(define-key lisp-mode-map "\C-i" 'lisp-indent-line)

(define-key lisp-mode-map "\C-j" 'eval-print-last-sexp))

;; Add all of the hooks...

(add-hook 'c++-mode-hook 'my-c++-mode-hook)

(add-hook 'c-mode-hook 'my-c-mode-hook)

(add-hook 'scheme-mode-hook 'my-scheme-mode-hook)

(add-hook 'emacs-lisp-mode-hook 'my-lisp-mode-hook)

(add-hook 'lisp-mode-hook 'my-lisp-mode-hook)

(add-hook 'perl-mode-hook 'my-perl-mode-hook)

;; Complement to next-error

(defun previous-error (n)

"Visit previous compilation error message and corresponding source code."

(interactive "p")

(next-error (- n)))

;; Misc...

(transient-mark-mode 1)

(setq mark-even-if-inactive t)

(setq visible-bell nil)

(setq next-line-add-newlines nil)

(setq compile-command "make")

(setq suggest-key-bindings nil)

(put 'eval-expression 'disabled nil)

(put 'narrow-to-region 'disabled nil)

(put 'set-goal-column 'disabled nil)

(if (>= emacs-major-version 21)

(setq show-trailing-whitespace t))

;; Elisp archive searching

(autoload 'format-lisp-code-directory "lispdir" nil t)

(autoload 'lisp-dir-apropos "lispdir" nil t)

(autoload 'lisp-dir-retrieve "lispdir" nil t)

(autoload 'lisp-dir-verify "lispdir" nil t)

;; Font lock mode

(defun my-make-face (face color &optional bold)

"Create a face from a color and optionally make it bold"

(make-face face)

(copy-face 'default face)

(set-face-foreground face color)

(if bold (make-face-bold face))

)

(if (eq window-system 'x)

(progn

(my-make-face 'blue "blue")

(my-make-face 'red "red")

(my-make-face 'green "dark green")

(setq font-lock-comment-face 'blue)

(setq font-lock-string-face 'bold)

(setq font-lock-type-face 'bold)

(setq font-lock-keyword-face 'bold)

(setq font-lock-function-name-face 'red)

(setq font-lock-doc-string-face 'green)

(add-hook 'find-file-hooks 'font-lock-auto-mode-select)

(setq baud-rate 1000000)

(global-set-key "\C-cmm" 'menu-bar-mode)

(global-set-key "\C-cms" 'scroll-bar-mode)

(global-set-key [backspace] 'backward-delete-char)

; (global-set-key [delete] 'delete-char)

(standard-display-european t)

(load-library "iso-transl")))

;; X11 or PC using direct screen writes

(if window-system

(progn

;; (global-set-key [M-f1] 'hilit-repaint-command)

;; (global-set-key [M-f2] [?\C-u M-f1])

(setq hilit-mode-enable-list

'(not text-mode c-mode c++-mode emacs-lisp-mode lisp-mode

scheme-mode)

hilit-auto-highlight nil

hilit-auto-rehighlight 'visible

hilit-inhibit-hooks nil

hilit-inhibit-rebinding t)

(require 'hilit19)

(require 'paren))

(setq baud-rate 2400) ; For slow serial connections

)

;; TTY type terminal

(if (and (not window-system)

(not (equal system-type 'ms-dos)))

(progn

(if first-time

(progn

(keyboard-translate ?\C-h ?\C-?)

(keyboard-translate ?\C-? ?\C-h)))))

;; Under UNIX

(if (not (equal system-type 'ms-dos))

(progn

(if first-time

(server-start))))

;; Add any face changes here

(add-hook 'term-setup-hook 'my-term-setup-hook)

(defun my-term-setup-hook ()

(if (eq window-system 'pc)

(progn

;; (set-face-background 'default "red")

)))

;; Restore the "desktop" - do this as late as possible

(if first-time

(progn

(desktop-load-default)

(desktop-read)))

;; Indicate that this file has been read at least once

(setq first-time nil)

;; No need to debug anything now

(setq debug-on-error nil)

;; All done

(message "All done, %s%s" (user-login-name) ".")

2.7.4 Extending the Range of Languages Emacs Understands

Now, this is all very well if you only want to program in the languages already catered for in the .emacs file (C, C++, Perl, Lisp and Scheme), but what happens if a new language called “whizbang” comes out, full of exciting features?

The first thing to do is find out if whizbang comes with any files that tell Emacs about the language. These usually end in .el, short for “Emacs Lisp”. For example, if whizbang is a FreeBSD port, we can locate these files by doing

% find /usr/ports/lang/whizbang -name "*.el" -print

and install them by copying them into the Emacs site Lisp directory. On FreeBSD 2.1.0-RELEASE, this is /usr/local/share/emacs/site-lisp.

So for example, if the output from the find command was

/usr/ports/lang/whizbang/work/misc/whizbang.el

we would do

# cp /usr/ports/lang/whizbang/work/misc/whizbang.el /usr/local/share/emacs/site-lisp

Next, we need to decide what extension whizbang source files have. Let's say for the sake of argument that they all end in .wiz. We need to add an entry to our .emacs file to make sure Emacs will be able to use the information in whizbang.el.

Find the auto-mode-alist entry in .emacs and add a line for whizbang, such as:

...

("\\.lsp$" . lisp-mode)

("\\.wiz$" . whizbang-mode)

("\\.scm$" . scheme-mode)

...

This means that Emacs will automatically go into whizbang-mode when you edit a file ending in .wiz.

Just below this, you will find the font-lock-auto-mode-list entry. Add whizbang-mode to it like so:

;; Auto font lock mode

(defvar font-lock-auto-mode-list

(list 'c-mode 'c++-mode 'c++-c-mode 'emacs-lisp-mode 'whizbang-mode 'lisp-mode 'perl-mode 'scheme-mode)

"List of modes to always start in font-lock-mode")

This means that Emacs will always enable font-lock-mode (i.e. syntax highlighting) when editing a .wiz file.

And that is all that is needed. If there is anything else you want done automatically when you open up a .wiz file, you can add a whizbang-mode hook (see my-scheme-mode-hook for a simple example that adds auto-indent).

2.8 Further Reading

For information about setting up a development environment for contributing fixes to FreeBSD itself, please see development(7).

-

Brian Harvey and Matthew Wright. Simply Scheme. MIT, 1994. ISBN 0-262-08226-8

-

Randall Schwartz. Learning Perl. O'Reilly, 1993. ISBN 1-56592-042-2

-

Patrick Henry Winston and Berthold Klaus Paul Horn. Lisp (3rd Edition). Addison-Wesley, 1989. ISBN 0-201-08319-1

-

Brian W. Kernighan and Rob Pike. The Unix Programming Environment. Prentice-Hall, 1984. ISBN 0-13-937681-X

-

Brian W. Kernighan and Dennis M. Ritchie. The C Programming Language (2nd Edition). Prentice-Hall, 1988. ISBN 0-13-110362-8

-

Bjarne Stroustrup. The C++ Programming Language. Addison-Wesley, 1991. ISBN 0-201-53992-6

-

W. Richard Stevens. Advanced Programming in the Unix Environment. Addison-Wesley, 1992. ISBN 0-201-56317-7

-

W. Richard Stevens. Unix Network Programming. Prentice-Hall, 1990. ISBN 0-13-949876-1

Chapter 3 Secure Programming

Contributed by Murray Stokely.

3.1 Synopsis

This chapter describes some of the security issues that have plagued UNIX programmers for decades and some of the new tools available to help programmers avoid writing exploitable code.

3.2 Secure Design Methodology

Writing secure applications takes a very scrutinous and pessimistic outlook on life. Applications should be run with the principle of “least privilege” so that no process is ever running with more than the bare minimum access that it needs to accomplish its function. Previously tested code should be reused whenever possible to avoid common mistakes that others may have already fixed.

One of the pitfalls of the UNIX environment is how easy it is to make assumptions about the sanity of the environment. Applications should never trust user input (in all its forms), system resources, inter-process communication, or the timing of events. UNIX processes do not execute synchronously so logical operations are rarely atomic.

3.3 Buffer Overflows

Buffer overflows have been around since the very beginnings of the von Neumann [1] architecture. They first gained widespread notoriety in 1988 with the Morris Internet worm. Unfortunately, the same basic attack remains effective today. Of the 17 CERT security advisories of 1999, 10 were directly caused by buffer-overflow software bugs. By far the most common type of buffer overflow attack is based on corrupting the stack.

Most modern computer systems use a stack to pass arguments to procedures and to store local variables. A stack is a last in first out (LIFO) buffer in the high memory area of a process image. When a program invokes a function a new "stack frame" is created. This stack frame consists of the arguments passed to the function as well as a dynamic amount of local variable space. The "stack pointer" is a register that holds the current location of the top of the stack. Since this value is constantly changing as new values are pushed onto the top of the stack, many implementations also provide a "frame pointer" that is located near the beginning of a stack frame so that local variables can more easily be addressed relative to this value [1]. The return address for function calls is also stored on the stack, and this is the cause of stack-overflow exploits, since overflowing a local variable in a function can overwrite the return address of that function, potentially allowing a malicious user to execute any code he or she wants.

Although stack-based attacks are by far the most common, it would also be possible to overrun the stack with a heap-based (malloc/free) attack.

The C programming language does not perform automatic bounds checking on arrays or pointers as many other languages do. In addition, the standard C library contains a handful of very dangerous functions.

| Function | Risk |
| --- | --- |
| strcpy(char *dest, const char *src) | May overflow the dest buffer |
| strcat(char *dest, const char *src) | May overflow the dest buffer |
| getwd(char *buf) | May overflow the buf buffer |
| gets(char *s) | May overflow the s buffer |
| [vf]scanf(const char *format, ...) | May overflow its arguments |
| realpath(char *path, char resolved_path[]) | May overflow the resolved_path buffer |
| [v]sprintf(char *str, const char *format, ...) | May overflow the str buffer |

3.3.1 Example Buffer Overflow

The following example code contains a buffer overflow designed to overwrite the return address and skip the instruction immediately following the function call. (Inspired by [4])

#include <stdio.h>

#include <string.h>

void manipulate(char *buffer) {

char newbuffer[80];

strcpy(newbuffer,buffer); /* no length check: this is the overflow */

}

int main() {

char ch,buffer[4096];

int i=0;

while ((buffer[i++] = getchar()) != '\n') {};

i=1;

manipulate(buffer);

i=2;

printf("The value of i is : %d\n",i);

return 0;

}

Let us examine what the memory image of this process would look like if we were to input 160 spaces into our little program before hitting return.

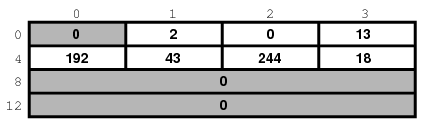

[XXX figure here!]

Obviously more malicious input can be devised to execute actual compiled instructions (such as exec(/bin/sh)).

3.3.2 Avoiding Buffer Overflows

The most straightforward solution to the problem of stack overflows is to always use length-restricted memory and string copy functions. strncpy and strncat are part of the standard C library. These functions accept a length value as a parameter which should be no larger than the size of the destination buffer. These functions will then copy up to `length' bytes from the source to the destination. However there are a number of problems with these functions. strncpy does not NUL-terminate the destination if the source is at least as long as the length given, while strncat always writes a terminating NUL in addition to the `length' bytes it appends, so passing the full size of the destination can still overflow it. The length parameter is thus used inconsistently between strncpy and strncat, so it is easy for programmers to get confused as to their proper usage. There is also a significant performance loss compared to strcpy when copying a short string into a large buffer, since strncpy NUL-fills the destination up to the size specified.

In OpenBSD, another memory copy implementation has been created to get around these problems. The strlcpy and strlcat functions guarantee that they will always NUL-terminate the destination string when given a non-zero length argument. For more information about these functions see [6]. The OpenBSD strlcpy and strlcat functions have been in FreeBSD since 3.3.
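To make the contract concrete, here is a minimal sketch of a copy routine that behaves the way strlcpy is described above: it never writes more than the size it is given, it always NUL-terminates when that size is non-zero, and it returns the length of the source so that the caller can detect truncation. The names copy_bounded() and manipulate_bounded() are hypothetical and are used only so that the example also builds on systems whose C library lacks strlcpy; on FreeBSD you would simply call strlcpy itself.

```c
#include <stddef.h>
#include <string.h>

/*
 * Copy src into dst, which has room for dstsize bytes.
 * At most dstsize - 1 bytes are copied and the result is always
 * NUL-terminated when dstsize > 0. Returns strlen(src); a return
 * value >= dstsize means the string was truncated.
 */
size_t
copy_bounded(char *dst, const char *src, size_t dstsize)
{
	size_t srclen = strlen(src);

	if (dstsize > 0) {
		size_t n = (srclen < dstsize - 1) ? srclen : dstsize - 1;

		memcpy(dst, src, n);
		dst[n] = '\0';
	}
	return (srclen);
}

/*
 * Bounded counterpart of manipulate() from the overflow example.
 * Returns 0 if the input fitted, -1 if it had to be truncated.
 */
int
manipulate_bounded(const char *buffer)
{
	char newbuffer[80];

	if (copy_bounded(newbuffer, buffer, sizeof(newbuffer)) >=
	    sizeof(newbuffer))
		return (-1);	/* input did not fit; treat as an error */
	return (0);
}
```

Checking the return value matters as much as the bounded copy itself: a silently truncated path or command can be a security problem of its own.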

3.3.2.1 Compiler based run-time bounds checking

Unfortunately there is still a very large assortment of code in public use which blindly copies memory around without using any of the bounded copy routines we just discussed. Fortunately, there is another solution. Several compiler add-ons and libraries exist to do run-time bounds checking in C/C++.

StackGuard is one such add-on that is implemented as a small patch to the gcc code generator. From the StackGuard website:

"StackGuard detects and defeats stack smashing attacks by protecting the return address on the stack from being altered. StackGuard places a "canary" word next to the return address when a function is called. If the canary word has been altered when the function returns, then a stack smashing attack has been attempted, and the program responds by emitting an intruder alert into syslog, and then halts."

"StackGuard is implemented as a small patch to the gcc code generator, specifically the function_prolog() and function_epilog() routines. function_prolog() has been enhanced to lay down canaries on the stack when functions start, and function_epilog() checks canary integrity when the function exits. Any attempt at corrupting the return address is thus detected before the function returns."

Recompiling your application with StackGuard is an effective means of stopping most buffer-overflow attacks, but it can still be compromised.
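The canary idea can be illustrated in ordinary C, although the real check is emitted by the compiler in the function prologue and epilogue rather than written by hand. The following sketch is a simulation only: struct fake_frame, CANARY_VALUE and both functions are hypothetical names, and the structure merely imitates a local buffer with a guard word placed after it.

```c
#include <stddef.h>
#include <string.h>

#define CANARY_VALUE 0x000aff0dUL	/* arbitrary guard value for this sketch */

/* Imitates a stack frame: a local buffer with a guard word after it. */
struct fake_frame {
	char		buf[8];
	unsigned long	canary;
};

/*
 * Write len bytes of data starting at the beginning of the frame, the
 * way an unchecked copy into buf would. The write is clamped to the
 * frame itself so that the simulation never touches other memory.
 */
void
unchecked_write(struct fake_frame *frame, const char *data, size_t len)
{
	unsigned char *raw = (unsigned char *)frame;

	if (len > sizeof(*frame))
		len = sizeof(*frame);
	memcpy(raw, data, len);
}

/* Returns 1 if the guard word is intact, 0 if it was overwritten. */
int
canary_intact(const struct fake_frame *frame)
{
	return (frame->canary == CANARY_VALUE);
}
```

A write that stays inside buf leaves canary_intact() returning 1. A write long enough to reach the guard word makes it return 0, which is the point at which a protected program would log the event and halt instead of returning through a corrupted address.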

3.3.2.2 Library based run-time bounds checking

Compiler-based mechanisms are completely useless for binary-only software that you cannot recompile. For these situations there are a number of libraries which re-implement the unsafe functions of the C library (strcpy, fscanf, getwd, etc.) and ensure that these functions can never write past the stack pointer.

-

libsafe

-

libverify

-

libparanoia

Unfortunately these library-based defenses have a number of shortcomings. These libraries only protect against a very small set of security related issues and they neglect to fix the actual problem. These defenses may fail if the application was compiled with -fomit-frame-pointer. Also, the LD_PRELOAD and LD_LIBRARY_PATH environment variables can be overwritten/unset by the user.

3.4 SetUID issues

There are at least 6 different IDs associated with any given process. Because of this you have to be very careful with the access that your process has at any given time. In particular, all setuid applications should give up their privileges as soon as they are no longer required.

The real user ID can only be changed by a superuser process. The login program sets this when a user initially logs in and it is seldom changed.

The effective user ID is set by the exec() functions if a program has its setuid bit set. An application can call seteuid() at any time to set the effective user ID to either the real user ID or the saved set-user-ID. When the effective user ID is set by exec() functions, the previous value is saved in the saved set-user-ID.
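Giving up privileges for good can be sketched as follows. This is a minimal example that assumes the program never needs its elevated IDs again; drop_privileges() is a hypothetical helper name. The group ID is changed first, because once the user ID has been dropped the process may no longer be allowed to change its group.

```c
#include <sys/types.h>
#include <unistd.h>

/*
 * Permanently revert to the real user and group IDs.
 * Returns 0 on success and -1 if any step fails or cannot be verified.
 */
int
drop_privileges(void)
{
	uid_t ruid = getuid();
	gid_t rgid = getgid();

	/* Group first: after setuid() we may lack the right to call setgid(). */
	if (setgid(rgid) != 0)
		return (-1);
	if (setuid(ruid) != 0)
		return (-1);

	/* Never assume the calls worked; check the result. */
	if (geteuid() != ruid || getegid() != rgid)
		return (-1);
	return (0);
}
```

Whether setuid() also resets the saved set-user-ID for a program that is set-user-ID to a non-root user differs between systems, so consult setuid(2) on the platform you are targeting. A program that only wants to lower its privileges temporarily would use seteuid() instead, as described above.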

3.5 Limiting your program's environment

The traditional method of restricting a process is with the chroot() system call. This system call changes the root directory from which all other paths are referenced for a process and any child processes. For this call to succeed the process must have execute (search) permission on the directory being referenced. The new environment does not actually take effect until you chdir() into your new environment. It should also be noted that a process can easily break out of a chroot environment if it has root privilege. This could be accomplished by creating device nodes to read kernel memory, attaching a debugger to a process outside of the jail, or in many other creative ways.

The behavior of the chroot() system call can be controlled somewhat with the kern.chroot_allow_open_directories sysctl variable. When this value is set to 0, chroot() will fail with EPERM if there are any directories open. If set to the default value of 1, then chroot() will fail with EPERM if there are any directories open and the process is already subject to a chroot() call. For any other value, the check for open directories will be bypassed completely.
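A common way to combine chroot() and chdir() is sketched below. The helper name enter_chroot() is hypothetical, and the particular sequence (change into the directory, make it the root, then change to "/") is one widely used idiom rather than something the text above prescribes.

```c
/* Exposes chroot() with glibc in strict ISO C modes; harmless elsewhere. */
#define _DEFAULT_SOURCE

#include <unistd.h>

/*
 * Confine the calling process to dir. Must be called with root
 * privilege. Returns 0 on success and -1 on failure, in which case
 * errno has been set by the call that failed.
 */
int
enter_chroot(const char *dir)
{
	if (chdir(dir) != 0)	/* move inside the new root first */
		return (-1);
	if (chroot(".") != 0)	/* make the current directory the root */
		return (-1);
	if (chdir("/") != 0)	/* working directory is now inside the root */
		return (-1);
	return (0);
}
```

Because a process that keeps root privilege can break out again, the call should normally be followed at once by giving up that privilege, and any directory descriptors opened beforehand should be closed.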

3.5.1 FreeBSD's jail functionality

The concept of a jail extends chroot() by limiting the powers of the superuser to create a true `virtual server'. Once a prison is set up, all network communication must take place through the specified IP address, and the power of "root privilege" in this jail is severely constrained.

While in a prison, any tests of superuser power within the kernel using the suser() call will fail. However, some calls to suser() have been changed to a new interface suser_xxx(). This function is responsible for recognizing or denying access to superuser power for imprisoned processes.

A superuser process within a jailed environment has the power to:

-

Manipulate credentials with setuid, seteuid, setgid, setegid, setgroups, setreuid, setregid, setlogin

-

Set resource limits with setrlimit

-

Modify some sysctl nodes (kern.hostname)

-

chroot()

-

Set flags on a vnode: chflags, fchflags

-

Set attributes of a vnode such as file permission, owner, group, size, access time, and modification time.

-

Bind to privileged ports in the Internet domain (ports < 1024)

Jail is a very useful tool for running applications in a secure environment but it does have some shortcomings. Currently, the IPC mechanisms have not been converted to the suser_xxx() interface, so applications such as MySQL cannot be run within a jail. Superuser access may have a very limited meaning within a jail, but there is no way to specify exactly what "very limited" means.

3.5.2 POSIX®.1e Process Capabilities

POSIX has released a working draft that adds event auditing, access control lists, fine grained privileges, information labeling, and mandatory access control.

This is a work in progress and is the focus of the TrustedBSD project. Some of the initial work has been committed to FreeBSD-CURRENT (cap_set_proc(3)).

3.6 Trust

An application should never assume that anything about the user's environment is sane. This includes (but is certainly not limited to): user input, signals, environment variables, resources, IPC, mmaps, the filesystem working directory, file descriptors, the number of open files, etc.

You should never assume that you can catch all forms of invalid input that a user might supply. Instead, your application should use positive filtering to only allow a specific subset of inputs that you deem safe. Improper data validation has been the cause of many exploits, especially with CGI scripts on the world wide web. For filenames you need to be extra careful about paths ("../", "/"), symbolic links, and shell escape characters.
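Positive filtering of a file name might look like the sketch below. The allow list (ASCII letters, digits, '.', '_' and '-'), the 255 character limit, and the function name are assumptions chosen for illustration; a real application would pick the subset it considers safe.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/*
 * Accept a file name only if every character is on an explicit
 * allow list (hypothetical policy, see the text above).
 */
bool
is_safe_filename(const char *name)
{
	static const char allowed[] =
	    "abcdefghijklmnopqrstuvwxyz"
	    "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
	    "0123456789._-";
	size_t len;

	assert(name != NULL);

	len = strlen(name);
	/* Reject empty and overlong names. */
	if (len == 0 || len > 255)
		return (false);
	/* Reject a leading '.', which also covers "." and "..". */
	if (name[0] == '.')
		return (false);
	/* Every character must be on the allow list. */
	return (strspn(name, allowed) == len);
}
```

Because '/' and shell metacharacters are simply absent from the allow list, path traversal and shell escapes are rejected without having to enumerate them. Checking the name alone does not protect against symbolic links; that has to be handled when the file is opened.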

Perl has a really cool feature called "Taint" mode which can be used to prevent scripts from using data derived outside the program in an unsafe way. This mode will check command line arguments, environment variables, locale information, the results of certain syscalls (readdir(), readlink(), getpwxxx()), and all file input.

3.7 Race Conditions

A race condition is anomalous behavior caused by the unexpected dependence on the relative timing of events. In other words, a programmer incorrectly assumed that a particular event would always happen before another.

Some of the common causes of race conditions are signals, access checks, and file opens. Signals are asynchronous events by nature so special care must be taken in dealing with them. Checking access with access(2) then open(2) is clearly non-atomic. Users can move files in between the two calls. Instead, privileged applications should seteuid() and then call open() directly. Along the same lines, an application should always set a proper umask before open() to obviate the need for spurious chmod() calls.
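Both recommendations can be sketched in C as follows. The helper names are hypothetical, and the use of O_EXCL is an addition not mentioned in the text above. For brevity the first function changes only the effective user ID; a complete version would also handle group IDs.

```c
#include <assert.h>
#include <fcntl.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Open path for reading with the effective UID temporarily set to uid,
 * so that the kernel checks permissions as part of open() itself.
 * Returns a descriptor, or -1 on failure.
 */
int
open_as_user(const char *path, uid_t uid)
{
	uid_t saved = geteuid();
	int fd;

	assert(path != NULL);

	if (seteuid(uid) == -1)
		return (-1);
	fd = open(path, O_RDONLY);
	/* Restore the previous effective UID before returning. */
	if (seteuid(saved) == -1) {
		if (fd != -1)
			close(fd);
		return (-1);
	}
	return (fd);
}

/*
 * Create a new file accessible by its owner only. The umask is set
 * before open(), so no chmod() is needed afterwards.
 */
int
create_private_file(const char *path)
{
	mode_t old_mask;
	int fd;

	assert(path != NULL);

	old_mask = umask(077);
	/* O_EXCL makes the call fail if the file already exists. */
	fd = open(path, O_WRONLY | O_CREAT | O_EXCL, 0666);
	umask(old_mask);
	return (fd);
}
```

In both functions the check and the action happen inside a single open() call, so there is no window between them in which the file can be swapped.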

Chapter 4 Localization and Internationalization - L10N and I18N

4.1 Programming I18N Compliant Applications

To make your application more useful for speakers of other languages, we hope that you will write I18N-compliant programs. The GNU gcc compiler and GUI libraries like QT and GTK support I18N through special handling of strings. Making a program I18N compliant is very easy, and it allows contributors to port your application to other languages quickly. Refer to the library specific I18N documentation for more details.

Contrary to common perception, I18N compliant code is easy to write. Usually, it only involves wrapping your strings with library specific functions. In addition, please be sure to allow for wide or multibyte character support.
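As one possible illustration of wrapping strings, the sketch below assumes the GNU gettext interface (libintl.h) is available; on FreeBSD this is usually provided by the gettext port and may need linking with -lintl. The text domain "myapp", the catalog directory, and the function names are hypothetical.

```c
#include <libintl.h>
#include <locale.h>

/* Conventional shorthand for marking translatable strings. */
#define _(msgid) gettext(msgid)

/*
 * Select the user's locale and the message catalog.
 * "myapp" and the catalog directory are placeholders.
 */
void
i18n_init(void)
{
	setlocale(LC_ALL, "");
	bindtextdomain("myapp", "/usr/local/share/locale");
	textdomain("myapp");
}

/* Returns the translated greeting, or the original if none is found. */
const char *
greeting(void)
{
	return (_("Hello, world!"));
}
```

When no translation catalog is installed, gettext() returns the original string, so the program keeps working in its source language.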

4.1.1 A Call to Unify the I18N Effort

It has come to our attention that the individual I18N/L10N efforts for each country have been duplicating one another's work. Many of us have been reinventing the wheel repeatedly and inefficiently. We hope that the various major groups in I18N could congregate into a group effort similar to the Core Team's responsibility.

Currently, we hope that, when you write or port I18N programs, you will send them to each country's related FreeBSD mailing list for testing. In the future, we hope to create applications that work in all languages out of the box without dirty hacks.

The FreeBSD internationalization mailing list has been established. If you are an I18N/L10N developer, please send your comments, ideas, questions, and anything you deem related to it.

4.1.2 Perl and Python

Perl and Python have I18N and wide character handling libraries. Please use them for I18N compliance.

In older FreeBSD versions, Perl may give warnings about not having a wide character locale installed on your system. You can set the environment variable LD_PRELOAD to /usr/lib/libxpg4.so in your shell.

In sh-based shells:

LD_PRELOAD=/usr/lib/libxpg4.so; export LD_PRELOAD

In C-based shells:

setenv LD_PRELOAD /usr/lib/libxpg4.so

Chapter 5 Source Tree Guidelines and Policies

Contributed by Poul-Henning Kamp.

This chapter documents various guidelines and policies in force for the FreeBSD source tree.

5.1 MAINTAINER on Makefiles

If a particular portion of the FreeBSD distribution is being maintained by a person or group of persons, they can communicate this fact to the world by adding a

MAINTAINER= email-addresses

line to the Makefiles covering this portion of the source tree.

The semantics of this are as follows:

The maintainer owns and is responsible for that code. This means that he is responsible for fixing bugs and answering problem reports pertaining to that piece of the code, and in the case of contributed software, for tracking new versions, as appropriate.

Changes to directories which have a maintainer defined shall be sent to the maintainer for review before being committed. Only if the maintainer does not respond for an unacceptable period of time, to several emails, will it be acceptable to commit changes without review by the maintainer. However, it is suggested that you try to have the changes reviewed by someone else if at all possible.

It is of course not acceptable to add a person or group as maintainer unless they agree to assume this duty. On the other hand, the maintainer does not have to be a committer and can easily be a group of people.

5.2 Contributed Software

Contributed by Poul-Henning Kamp and David O'Brien.

Some parts of the FreeBSD distribution consist of software that is actively being maintained outside the FreeBSD project. For historical reasons, we call this contributed software. Some examples are sendmail, gcc and patch.

Over the last couple of years, various methods have been used in dealing with this type of software and all have some number of advantages and drawbacks. No clear winner has emerged.

Since this is the case, after some debate one of these methods has been selected as the “official” method and will be required for future imports of software of this kind. Furthermore, it is strongly suggested that existing contributed software converge on this model over time, as it has significant advantages over the old method, including the ability to easily obtain diffs relative to the “official” versions of the source by everyone (even without cvs access). This will make it significantly easier to return changes to the primary developers of the contributed software.

Ultimately, however, it comes down to the people actually doing the work. If using this model is particularly unsuited to the package being dealt with, exceptions to these rules may be granted only with the approval of the core team and with the general consensus of the other developers. The ability to maintain the package in the future will be a key issue in the decisions.

Note: Because of some unfortunate design limitations with the RCS file format and CVS's use of vendor branches, minor, trivial and/or cosmetic changes are strongly discouraged on files that are still tracking the vendor branch. “Spelling fixes” are explicitly included here under the “cosmetic” category and are to be avoided for files with revision 1.1.x.x. The repository bloat impact from a single character change can be rather dramatic.

The Tcl embedded programming language will be used as an example of how this model works:

src/contrib/tcl contains the source as distributed by the maintainers of this package. Parts that are entirely not applicable for FreeBSD can be removed. In the case of Tcl, the mac, win and compat subdirectories were eliminated before the import.

src/lib/libtcl contains only a bmake style Makefile that uses the standard bsd.lib.mk makefile rules to produce the library and install the documentation.

src/usr.bin/tclsh contains only a bmake style Makefile which will produce and install the tclsh program and its associated man-pages using the standard bsd.prog.mk rules.

src/tools/tools/tcl_bmake contains a couple of shell-scripts that can be of help when the tcl software needs updating. These are not part of the built or installed software.

The important thing here is that the src/contrib/tcl directory is created according to the rules: it is supposed to contain the sources as distributed (on a proper CVS vendor-branch and without RCS keyword expansion) with as few FreeBSD-specific changes as possible. The 'easy-import' tool on freefall will assist in doing the import, but if there are any doubts on how to go about it, it is imperative that you ask first and not blunder ahead and hope it “works out”. CVS is not forgiving of import accidents and a fair amount of effort is required to back out major mistakes.

Because of the previously mentioned design limitations with CVS's vendor branches, it is required that “official” patches from the vendor be applied to the original distributed sources and the result re-imported onto the vendor branch again. Official patches should never be patched into the FreeBSD checked out version and “committed”, as this destroys the vendor branch coherency and makes importing future versions rather difficult as there will be conflicts.

Since many packages contain files that are meant for compatibility with architectures and environments other than FreeBSD, it is permissible to remove parts of the distribution tree that are of no interest to FreeBSD in order to save space. Files containing copyright notices and release-note kind of information applicable to the remaining files shall not be removed.

If it seems easier, the bmake Makefiles can be produced from the dist tree automatically by some utility, something which would hopefully make it even easier to upgrade to a new version. If this is done, be sure to check in such utilities (as necessary) in the src/tools directory along with the port itself so that it is available to future maintainers.

In the src/contrib/tcl level directory, a file called FREEBSD-upgrade should be added and it should state things like: